Broadcom (AVGO)

Q4 Results

As we have been travelling for a few weeks, we missed many quarterly results announcements and need to revisit some of them, starting with Broadcom (AVGO).

We have written about Broadcom on a number of occasions, and some of these reports can be found here and here.

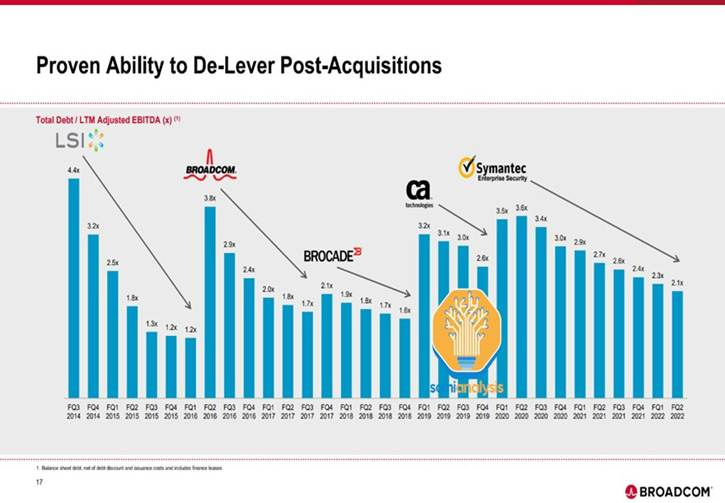

Broadcom is a large technology conglomerate that has been built up over many years through debt-financed acquisitions. The company has demonstrated an impressive ability to deleverage after each acquisition and then repeat the cycle.

In recent years, with the rise of AI, its semiconductor solutions division has grown especially strongly. In our first report on the company, on 17th June 2024, we noted the following:

In semiconductors, the company develops devices with a focus on complex digital and mixed-signal complementary metal-oxide-semiconductor (CMOS) devices and analogue III-V-based products.

One of the key products seeing huge demand in the GenAI boom is the GPU accelerator. The generally accepted definition of an accelerator is “a purpose-built design that accompanies a processor for accelerating a specific function or workload (also sometimes called ‘co-processors’). Since general-purpose processors (CPUs) are designed to handle a wide range of workloads, processor architectures are rarely the most optimal for specific functions or workloads.”

A GPU accelerator is “hardware that is optimized for doing the computations for three-dimensional computer graphics.”

Broadcom does not compete with Nvidia as it does not design and supply GPUs. Instead, it supplies GPU accelerators which help GPUs work better for specialised tasks.

Broadcom’s rising accelerator sales are currently driven to a significant degree by Google’s aggressive ramp-up of its custom-developed Tensor Processing Units (TPUs).

Google’s website describes TPUs as follows:

“TPUs are Google’s custom-developed application-specific integrated circuits (ASICs) used to accelerate machine learning workloads. Cloud TPU is a web service that makes TPUs available as scalable computing resources on Google Cloud.”

“TPUs train your models more efficiently using hardware designed for performing large matrix operations often found in machine learning algorithms. TPUs have on-chip high-bandwidth memory (HBM) letting you use larger models and batch sizes. TPUs can be connected in groups called Pods that scale up your workloads with little to no code changes.”

A large part of the new workloads that will move to the Cloud in the next few years will be GenAI-driven. The large Cloud Service Providers (CSPs), plus Meta Platforms, are investing heavily in datacentres. Those datacentres need GPUs (Nvidia, AMD, Intel) and accelerators (Broadcom, Marvell), as well as networking (Nvidia, Arista, Cisco) and software (Nvidia, AMD, Intel).

The results were very strong, and the stock traded up in response. However, the post-earnings conference call seemed to unsettle the market, and the stock fell 10%.

Summary of Results

The results were quite strong and better than expected.

