We were travelling in Southern India for the last two weeks and did not track the market over the period. We are now catching up with some of the companies that have reported quarterly results, starting with Meta Platforms Inc (Meta). We have written about Meta many times and the most recent report can be found here.

Meta’s results were above analysts’ expectations.

Meta reported Q4 EPS of $8.02 per share compared with $5.33 in the same quarter last year. The mean analysts’ expectation was $6.77 per share.

Revenue rose 20.6% to $48.39bn compared with analysts’ expectations of $47.04bn.

The company reported quarterly net income of $20.84bn.
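The size of the beat can be checked directly from the figures quoted above. The short sketch below is only an illustration: the variable names and the `pct_change` helper are our own, and because it uses the rounded numbers from the text, the results may differ slightly from growth rates calculated on unrounded company data.

```python
# Figures as quoted in the text (EPS in USD per share, other amounts in USD bn).
eps_actual = 8.02
eps_prior_year = 5.33
eps_consensus = 6.77
revenue_actual = 48.39
revenue_consensus = 47.04
net_income = 20.84


def pct_change(new: float, old: float) -> float:
    """Percentage change from `old` to `new` (hypothetical helper)."""
    return (new - old) / old * 100


eps_growth = pct_change(eps_actual, eps_prior_year)       # y/y EPS growth
eps_surprise = pct_change(eps_actual, eps_consensus)      # beat vs mean estimate
revenue_surprise = pct_change(revenue_actual, revenue_consensus)
net_margin = net_income / revenue_actual * 100            # net income / revenue

print(f"EPS growth y/y:      {eps_growth:.1f}%")
print(f"EPS beat:            {eps_surprise:.1f}%")
print(f"Revenue beat:        {revenue_surprise:.1f}%")
print(f"Net profit margin:   {net_margin:.1f}%")
```

On these rounded inputs, EPS grew by roughly half year on year and beat the mean estimate by a little over 18%, while the revenue beat was much smaller at just under 3%.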

The earnings numbers were released in the same week as the launch of Chinese company DeepSeek's AI models, which seemed to have achieved similar results to US big tech models, but at a fraction of the cost. The Meta CEO said this event reinforced his conviction that open source AI is the right strategy.

"There's going to be an open source standard globally," Mark Zuckerberg said and added "It's important that it's an American standard."

DeepSeek has said its models either match or outperform top U.S. rivals at a fraction of the cost, including Meta's own Llama models, challenging the prevailing view that scaling AI requires vast computing power and investment.

This raises fresh questions about Meta's AI capital spending, and the same questions apply to Microsoft, Amazon, Google and Oracle among others. Meta announced that it plans capex of as much as $65bn in 2025 to expand its AI infrastructure. The collective capital expenditure of the “big five” in 2025 is expected to rise to about $230bn. The fear is that investment on this scale is too high, will generate low returns and may largely have to be written off.

Meta investors appear not to be too concerned, as the company has the earnings and cash flow to fund this scale of capex easily. Meta relies on its core social media ads business (almost 100% of revenues) to cover its AI and "metaverse" investments; the latter include smart glasses and augmented reality systems.

The Daily Active Users (DAU), a metric Meta uses to track unique users who open any one of its apps in a day, rose about 5% from a year earlier to 3.35bn, which is roughly 40% of the global human population (!). The audience for the core advertising business is very large and continues to grow.

Zuckerberg said it was too early to tell how DeepSeek's emergence globally will impact Meta's investment and capital expenditure strategy, while noting that Meta's AI teams were already integrating the Chinese company's insights into their plans.

Meta continues to lose money at its metaverse unit, Reality Labs, which beat sales expectations but lost about $5bn in Q4.

Meta — among the top buyers of Nvidia's AI chips — aims to end the year with over 1.3mn GPUs and bring about 1GW of computing power online.

The company would continue buying such chips while also working on internally designed custom silicon. Meta aims to use its own chips to train its AI ranking and recommendations systems - which determine what appears in users' feeds - by 2026.

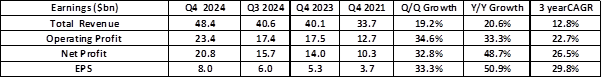

Detailed Q4 numbers

The Q4 numbers show the business continues to be very strong: it is growing revenues strongly, increasing margins, growing profits and boosting cash flows meaningfully.

This was a strong set of expectation-beating numbers with total revenues growing 20.6% (y/y) and operating revenues at 33.3% (y/y). EPS growth was 50.9%. This indicates continued strong operating leverage in the business.

Family Of Apps (FOA) Revenues grew at 21%. DAU, already at a high level, grew another 5% (y/y) to 3.35bn. The impressive 29% growth in average revenue per person (ARPP) suggests the company is getting even better at monetising its vast user base.

Margins grew impressively on a y/y basis, with operating margins 540bps higher. One reason is a 13-percentage point favourable impact on expenses growth from legal accrual reductions in Q4 and lower year-over-year restructuring costs. These are likely to be one-offs, and margins may stabilise or decline a little going forward.

The concern is not with the existing business but with the huge and growing investments in the “Metaverse” and in AI, which are taking up more than 50% (and rising) of the operating cash flow.

Highlights of earnings conference call

The company believes the next twelve months will be a critical period.

This is going to be a really big year. I know it always feels like every year is a big year, but more than usual it feels like the trajectory for most of our long-term initiatives is going to be a lot clearer by the end of this year. I keep telling our teams that this is going to be intense. We have about 48-weeks to get on the trajectory we want to be on.

One key reason is that it is expected to be a key year for the widespread diffusion of AI.

This is going to be the year when a highly intelligent and personalized AI assistant reaches more than 1bn people, and I expect Meta AI to be that leading AI assistant. Meta AI is already used by more people than any other assistant, and once a service reaches that kind of scale it usually develops a durable long-term advantage. We have a really exciting roadmap for this year with a unique vision focused on personalization.

I expect that 2025 will be the year when it becomes possible to build an AI engineering agent that has coding and problem-solving abilities of around a good mid-level engineer. And this is going to be a profound milestone and potentially one of the most important innovations in history, as well as over time, potentially a very large market. Whichever company builds this first I think is going to have a meaningful advantage in deploying it to advance their AI research and shape the field.

We expect to bring online almost 1GW of capacity this year, and we're building a 2GW and potentially bigger AI datacentre, that is so big that it will cover a significant part of Manhattan if it were placed there.

We're planning to fund all this by at the same time investing aggressively in initiatives that use these AI advances to increase revenue growth.

The problem is the range of AI products is already very large and developing extremely rapidly.

Meta AI is in a crowded fast-developing field.

Meta is assuming there will be room for a number of AI models depending on the specific use case.

People want their AI to be personalized to their context, their interests, their personality, their culture, and how they think about the world. I don't think that there's just going to be one big AI that everyone uses that does the same thing. People are going to get to choose how their AI works and what it looks like for them.

Meta’s Large Language Model (LLM) family is called Llama. The company describes Llama as open source in that the model weights (the parameters that enable the model to generate predictions) are publicly available under a license that permits commercial use. However, a purist would argue that true open-source AI requires transparency in training data, source code and model architecture, and that Meta falls short in this regard.

This could very well be the year when Llama and open source become the most advanced and widely used AI models as well. Llama 4 is making great progress in training. Llama 4 mini is done with pre-training and our reasoning models and larger model are looking good too. Our goal with Llama 3 was to make open source competitive with closed models, and our goal for Llama 4 is to lead. Llama 4 will be natively multimodal; it's an omni-model and will have agentic capabilities, so it's going to be novel and it’s going to unlock a lot of new use cases.

The company believes its smart glasses are proving to be a successful consumer product and that they will become the key way in which users interact with AI.

Our Ray-Ban Meta AI glasses are a real hit, and this will be the year when we understand the trajectory for AI glasses as a category. Many breakout products in the history of consumer electronics have sold 5mn to 10mn units in their third generation. This will be a defining year that determines if we're on a path towards many hundreds of millions and eventually billions of AI glasses and glasses being the next computing platform like we've been talking about for some time or if this is just going to be a longer grind.

I've said for a while that I think that glasses are the ideal form factor for an AI device, because you can let an AI assistant on your glasses see what you see and hear what you hear, which gives it the context to be able to understand everything that's going on in your life that you would want to talk to it about and get context on.

I think the glasses are going to be a very important computing platform in the future. When phones became the primary computing platform, it's not like computers went away. I think we'll have phones for some time. It's kind of hard for me to imagine that a decade or more from now, all the glasses aren't going to basically be AI glasses, as well as a lot of people who don't wear glasses today, finding that to be a useful thing.

They report continued progress in the Family of Apps (FOA).

I expect Threads (a rival to Twitter/X) to continue on its trajectory to become the leading discussion platform and eventually reach 1bn people over the next several years. Threads now has more than 320mn monthly actives and has been adding more than 1mn sign-ups per day.

I expect WhatsApp to continue gaining share and making progress towards becoming the leading messaging platform in the U.S. like it is in a lot of the rest of the world. WhatsApp now has more than 100mn monthly actives in the U.S.

Facebook is used by more than 3bn monthly actives and we're focused on growing its cultural influence.

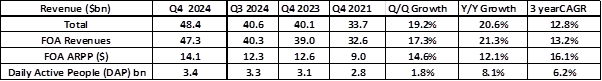

Geographically, advertising growth has been strong across all the regions as shown above with the strongest growth in the Asia Pacific region in the most recent quarter.

Advertising revenue growth has three key drivers: the total audience size (3.35bn daily), the number of ad impressions (ads displayed to the audience) and the price per ad. Meta has seen impressive growth in all of these, especially the last, which indicates its pricing power.

In Q4, the total number of ad impressions served across our services increased 6% and the average price per ad increased 14%. Impression growth was mainly driven by Asia-Pacific. Pricing growth benefited from increased advertiser demand, in part driven by improved ad performance. This was partially offset by impression growth, particularly from lower-monetizing regions and surfaces.
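The impression and price growth rates quoted above compound rather than simply add. The sketch below is a rough illustration only, assuming ad revenue is approximately impressions multiplied by the average price per ad, and ignoring currency effects and non-advertising revenue. The variable names are our own.

```python
# Growth rates quoted in the call excerpt above (y/y, Q4).
impression_growth = 0.06   # ad impressions up 6%
price_growth = 0.14        # average price per ad up 14%

# Assumption: ad revenue ~ impressions x average price per ad,
# so the two growth factors multiply.
implied_ad_revenue_growth = (1 + impression_growth) * (1 + price_growth) - 1

# A simple sum of the two rates understates the compounded result slightly.
additive_approximation = impression_growth + price_growth

print(f"Implied ad revenue growth: {implied_ad_revenue_growth:.1%}")
print(f"Additive approximation:    {additive_approximation:.1%}")
```

The compounded figure of just under 21% is in line with the 21% growth in Family of Apps revenues reported earlier, which suggests the two drivers explain nearly all of the revenue growth in the quarter.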

The FOA business has high margins and is very profitable.

Family of Apps operating income was $28.3bn, representing a 60% operating margin.

However, the overall operating margin is 48%. This lower number reflects the losses in Reality Labs, which is the metaverse division. In other words, Meta would be a much more profitable and cash-generative business if it did not have the metaverse investments in Reality Labs.

Within our Reality Labs segment, Q4 revenue was $1.1bn, driven by hardware sales and up 1% (y/y). Reality Labs expenses were $6bn, up 6% (y/y), driven primarily by higher infrastructure costs and employee compensation, partially offset by lower restructuring costs. Reality Labs operating loss was $5bn.
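The gap between the 60% segment margin and the 48% group margin can be reconstructed from the numbers quoted above. This is a minimal sketch on rounded figures, assuming Family of Apps revenue equals total revenue less Reality Labs revenue (the company reports these two segments). The variable names are illustrative.

```python
# Q4 figures quoted in the text (USD bn, rounded).
total_revenue = 48.39
foa_operating_income = 28.3
rl_revenue = 1.1
rl_expenses = 6.0

# Assumption: the two segments together make up total revenue.
foa_revenue = total_revenue - rl_revenue

# Reality Labs operating result (negative means a loss).
rl_operating_income = rl_revenue - rl_expenses

# Segment margin versus group margin.
foa_margin = foa_operating_income / foa_revenue
group_operating_income = foa_operating_income + rl_operating_income
group_margin = group_operating_income / total_revenue

print(f"Reality Labs operating result: {rl_operating_income:.1f}bn")
print(f"FOA operating margin:          {foa_margin:.1%}")
print(f"Group operating margin:        {group_margin:.1%}")
print(f"Margin drag from Reality Labs: {(foa_margin - group_margin) * 100:.1f} pts")
```

On these inputs the Reality Labs loss costs the group roughly eleven to twelve percentage points of operating margin.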

The audience is growing, and so is the time it spends engaging with the FOA.

In Q4, global video time grew at double-digit percentages (y/y) on Instagram, and we’re seeing particular strength in the U.S. on Facebook, where video time spent was also up double-digit rates (y/y).

We see continued opportunities to drive video growth in 2025 through ongoing optimizations to our ranking systems. We’re also making several product bets that are focused on setting up our platforms for longer-term success. Creators are one of our central focuses. On Instagram, we continue to prioritize original posts in recommendations to help smaller creators get discovered. We also want to ensure creators have a place to experiment with their content, so we introduced a new feature in Q4 that allows creators to first share a Reel with people who don’t follow them.

Reels are already re-shared over 4.5bn times a day, and we’ve been introducing more features that bring together the social and entertainment aspects of Instagram.

They are working on monetization efficiency. This is defined as maximising revenue generation from user engagement while optimizing ad inventory and maintaining user experience (i.e. not bombarding the user with too many and/or irrelevant ads).

The first part of this work is optimizing the level of ads within organic engagement. We continue to grow supply on lower monetizing surfaces, like video, while optimizing ad supply on each of our surfaces to deliver ads at the time and place they will be most relevant to people. For example, we are continuing to better personalize when ads show up, including the optimal locations in the depth of someone’s feed, to introduce ad supply when it’s most optimal for the user and revenue. This is enabling efficient supply growth.

Some apps such as Threads do not have adverts at the moment but they are going to start.

Longer term, we also see impression growth opportunities on unmonetized surfaces like Threads, which we are beginning to test ads on this quarter. We expect the introduction of ads on Threads will be gradual and don’t anticipate it being a meaningful driver of overall impression or revenue growth in 2025.

The second part of increasing monetization efficiency is improving marketing performance. The ads must be better matched to the user to improve conversion. This is done by ad ranking systems, and improvements to those systems enable better targeting and higher conversion rates.

The ongoing enhancements to our ads ranking systems are an important driver of this work. In the second-half of 2024, we introduced an innovative new machine learning system in partnership with Nvidia, called Andromeda. This more efficient system enabled a 10,000 times increase in the complexity of models we use for ads retrieval, which is the part of the ranking process where we narrow down a pool of tens of millions of ads to the few thousand we consider showing someone. The increase in model complexity is enabling us to run far more sophisticated prediction models to better personalize, which ads we show someone.

This has driven an 8% increase in the quality of ads that people see on objectives we’ve tested. Andromeda’s ability to efficiently process larger volumes of ads also positions us well for the future as advertisers use our generative AI tools to create and test more ads.

They fully intend to continue to invest in Infrastructure and AI.

Our primary focus remains investing capital back into the business, with infrastructure and talent being our top priorities.

We expect compute will be central to many of the opportunities we’re pursuing as we advance the capabilities of Llama, drive increased usage of generative AI products and features across our platform, and fuel core ads and organic engagement initiatives.

We’re working to meet the growing capacity needs for these services by both scaling our infrastructure footprint and increasing the efficiency of our workloads.

Although they will continue to be big buyers of Nvidia GPUs, they are also looking to deploy their own in-house built silicon called MTIA.

We’re pursuing cost efficiencies by deploying our custom MTIA silicon in areas where we can achieve a lower cost of compute by optimizing the chip to our unique workloads. In 2024 we started deploying MTIA to our ranking and recommendation inference workloads for ads and organic content. We expect to further ramp adoption of MTIA for these use cases throughout 2025 before extending our custom silicon efforts to training workloads for ranking and recommendations next year.

We started adopting MTIA in the first half of 2024 for core ranking and recommendations inference. We'll continue ramping adoption for those workloads over the course of 2025 as we use it for both incremental capacity and to replace some GPU-based servers when they reach the end of their useful lives. Next year, we're hoping to expand MTIA to support some of our core AI training workloads and over time, some of our Gen AI use cases.

They are actively using AI to improve their own efficiency.

We’re supporting this by building tools to help our engineering base be more productive. As part of our efficiency focus over the past two years, we’ve made significant improvements in our internal processes and developer tools and introduced new tools like our AI-powered coding assistant, which is helping our engineers write code more quickly. The continuous advancements in Llama’s coding capabilities will provide even greater leverage to our engineers, and we are focused on expanding its capabilities to not only assist our engineers in writing and reviewing our code, but also to begin generating code changes to automate tool updates and improve the quality of our code base.

On the approach to AI

Our goal is to advance AI research and advance our own development internally. And I think it's just going to be a very profound thing. That's something that I think will show up through making our products better over time. But -- and then as that works, there will potentially be a market opportunity down the road. This year like an AI engineer that is extremely widely deployed, changing all of development.

There has been a change in emphasis in terms of where the compute infrastructure required for LLMs will be utilised. Earlier, the bulk of the use was in training models, but as the models have shifted towards reasoning models, more of the compute infrastructure has to be devoted to inference.

There's already sort of a debate around how much of the compute infrastructure that we're using is going to go towards pretraining versus as you get more of these reasoning time models or reasoning models where you get more of the intelligence by putting more of the compute into inference, whether just will mix shift how we use our compute infrastructure towards that.

That was already something that I think a lot of the other labs and ourselves were starting to think more about and already seemed pretty likely even before this, that -- like of all the compute that we're using, that the largest pieces aren't necessarily going to go towards pre-training.

That doesn't mean that you need less compute. A new property that's emerged is the ability to apply more compute at inference time in order to generate a higher level of intelligence and a higher quality of service, which means that as a company that has a strong business model to support this, I think that's generally an advantage that we're now going to be able to provide a higher quality of service than others, who don't necessarily have the business model to support it on a sustainable basis.

Having AI products and services that billions of people can use requires huge resources, and only the largest companies will be able to make the required investments. Meta is gambling that the ability to scale investments will be a strategic advantage that ensures it is among the winners in the AI race.

We're implementing AI into all the feeds and ad products and things like that, we're just serving billions of people, which is different from, okay, you start to pretrain a model, and that model is sort of agnostic to how many people are using it…it's going to be expensive for us to serve all of these people. Investing very heavily in CapEx and infra is going to be a strategic advantage over time. It's possible that we'll learn otherwise at some point, but I just think it's way too early to call that. And at this point, I would bet that the ability to build out that kind of infrastructure is going to be a major advantage for both the quality of the service and being able to serve the scale that we want to.

Their product development process is to build large scale user engagement first before thinking about monetisation and boosting revenues and cashflows. This suggests revenues from the investments currently being made will only flow after two or three or more years. The next 12 to 18 months is about scaling up the user base.

Our product development process is we build these products. We try to scale them to reach usually 1bn people or more. It's once they're at scale that we really start focusing on monetisation.

We typically don't really ramp these things up or see them as meaningfully contributing to the business until we reach quite a big scale. So the thing that I think is going to be meaningful this year is the kind of getting of the AI product to scale. Last year was sort of the introduction and starting to get to be used. This year my kind of expectation and hope is that we will be at a sufficient scale and have sufficient kind of flywheel of people using it and improvement from that, that this will have a durable advantage.

We are going to be taking the AI methods and applying them to advertising and recommendations and feeds and things like that. So the actual business opportunity for Meta AI and AI Studio and business agents and people interacting with these AIs remains beyond 2025 for the most part.

If investors are worried that revenues seem very distant, the company is essentially saying “Trust us, we have done it before.”

But nonetheless, we've run a process like these many times. We built a product. We make it good. We scale it to be large. We build out the business around it. That's what we do. I'm very optimistic, but it's going to take some time.

Summary

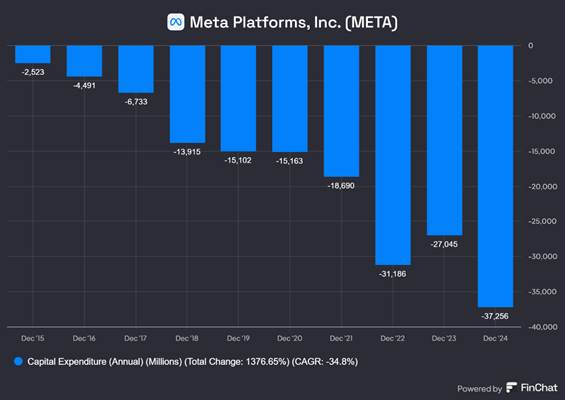

Meta has a huge number of daily and monthly users of its Family of Apps (FOA). The number of daily users has hit 3.35bn, roughly 40% of the global population. The company has a highly profitable, cash-generative business selling advertising to this large audience. The ARPP has risen to $14 per quarter. This gives them a quarterly revenue run rate of about $47bn ($14 * 3.35bn). They have plenty of scope to grow this as some apps like WhatsApp have hardly been monetised.
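The run-rate arithmetic above is simple enough to sketch. The snippet below multiplies the rounded ARPP by the daily user count, as the text does, and compares the result with the reported Q4 revenue. It assumes ARPP is measured against daily active people, as that multiplication implies, and the annualised figure is a plain multiple of four that ignores seasonality.

```python
# Rounded figures quoted in the text.
arpp_per_quarter = 14.0       # average revenue per person, USD per quarter
daily_active_people_bn = 3.35 # daily active people, in billions
reported_q4_revenue = 48.39   # total Q4 revenue, USD bn

# Quarterly run rate implied by ARPP x daily users (USD bn).
quarterly_run_rate = arpp_per_quarter * daily_active_people_bn

# Naive annualisation: four equal quarters (an assumption, ignores seasonality).
annualised_run_rate = quarterly_run_rate * 4

# How much of reported Q4 revenue the rounded estimate captures.
coverage = quarterly_run_rate / reported_q4_revenue

print(f"Quarterly run rate:  ${quarterly_run_rate:.1f}bn")
print(f"Annualised run rate: ${annualised_run_rate:.1f}bn")
print(f"Share of reported Q4 revenue: {coverage:.1%}")
```

The estimate lands slightly below reported revenue, which is to be expected given that the ARPP input is rounded down to $14 and total revenue also includes Reality Labs sales.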

It could just carry on doing this and be a very successful company. However, that is not the company’s DNA. They always seek to invest heavily in new innovations which have little prospect of short-term revenues but could become huge sources of revenues eventually.

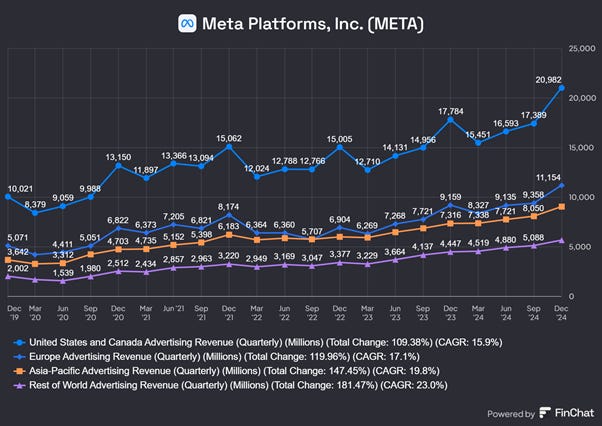

The company has spent billions of dollars in the last few years investing in the “metaverse”, and that division (Reality Labs) is losing about $20bn a year on an annualised basis. This is serious money even for a company with the scale of Meta. In the last few years, the spending on the metaverse has been dwarfed by AI spending. This can be seen in Meta’s growing capital expenditure, shown in the graph below:

It is shown as a negative bar as it is an outflow of cash. Capital expenditure was $37bn in 2024 and is set to be $65bn in 2025.

This is a very large number and a significant proportion of the company’s operating cash flow as shown below:

In 2024, Meta’s operating cash flow was about $91bn, so the proposed 2025 capex of $65bn is equivalent to roughly 70% of that figure.
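To put the capex numbers in proportion, the sketch below compares them with operating cash flow. Since 2025 operating cash flow is not yet known, the 2025 plan is measured against the 2024 figure. That is a simplifying assumption which will overstate the burden if cash flow keeps growing. The variable names are illustrative.

```python
# Figures quoted in the text (USD bn).
capex_2024 = 37.0
capex_2025_plan = 65.0          # upper end of the announced range
operating_cash_flow_2024 = 91.0

# Capex as a share of 2024 operating cash flow.
capex_share_2024 = capex_2024 / operating_cash_flow_2024
# Assumption: 2025 plan compared against 2024 cash flow (2025 not yet known).
capex_share_2025_plan = capex_2025_plan / operating_cash_flow_2024

# Year-on-year increase in planned capex.
capex_growth = capex_2025_plan / capex_2024 - 1

print(f"2024 capex / 2024 operating cash flow:      {capex_share_2024:.0%}")
print(f"2025 plan capex / 2024 operating cash flow: {capex_share_2025_plan:.0%}")
print(f"Planned capex growth 2024 to 2025:          {capex_growth:.0%}")
```

On these numbers capex moves from around two fifths of operating cash flow to around seven tenths, which is why the scale of the 2025 plan attracts so much attention.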

Meta and their investors are taking a gamble that these investments will translate into huge and growing revenues and cash flows in the future.

The problem is that other large companies such as Microsoft, Google, Amazon and Oracle are also making similarly large bets. In addition, Chinese companies such as Alibaba (BABA) and others are also committing significant capital. These companies offer cloud-hosting services and can host AI workloads to generate revenues.

Meta does not have a cloud hosting business, and therefore its revenues from AI will come from the improvements that AI enables in its advertising business. In Reality Labs, the current key revenue opportunity comes from the sales of VR headsets and the Ray-Ban Meta smart glasses. Other revenue opportunities may be identified in the future.

An investment in Meta stock is a gamble that these huge investments will unlock significant growth in revenues and cash flows to generate high returns. The investment outlays are very large and the extent and timing of returns are uncertain.

Valuation

At the current share price of $668 per share, the stock is trading at a two-year forward P/E ratio of 23.4X which is a reasonable level for a company with a ROCE of +28% and a likely earnings growth of 5% to 13% per annum in the next two years. Markets are perhaps already pricing in some of the uncertainty noted above.

Conclusions

Huge amounts of capital are being invested in AI infrastructure by a number of companies. There is a risk that if revenues from AI prove to be elusive, insufficient or too far in the future, much of this investment will have to be written off.

In January we reduced our portfolio allocation to stocks significantly due to worries about valuations, rising bond yields and the likely inflationary effects of the tariff policies of the Trump administration. One of the stocks we sold down was Meta, reducing its allocation from 6% to 1.5%.

The stock rallied on these Q4 results and is now trading 10% higher than the price we sold it at! This suggests our decision to sell was a mistake. We are not worried. We took significant profits based on the information available in mid-January 2025. The Q4 results released since then show the underlying business continues to be strong and seems to have the scope to grow profitably even without the benefits of AI.

We will be watching the stock carefully and will look to buy back into it if it falls significantly (say 15%) below current levels.