Nvidia Inc (NVDA)

Q3 2024 Results

We first covered Nvidia (NVDA) on 30th May 2023 in an “initiating coverage” type report. That report can be found here.

We did follow-up reports some of which can be found here, here, and here.

The work on these reports convinced us of the investment case for Nvidia stock.

We allocated 2-3% of our portfolio to the stock and it rapidly became nearly 9% of the portfolio. The stock had reached about $131 per share when we reduced the holding to 3%. For a while it looked like a good move, as the stock peaked two days after the sale and then fell back to about $103. We did not add to our position at the lower level and the stock is now up at $145. Sometimes, when you find a really special company, it pays to just hold on and, perhaps, buy more on significant dips.

The share price has done very well and shareholders in Nvidia have a lot to be thankful for.

Q3 Results

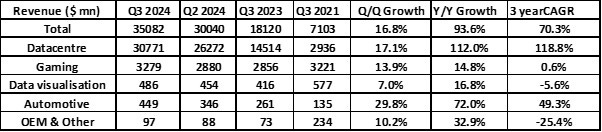

Take a good look at the numbers above. No matter how long your investing career, you may never see such strong results from a large cap company.

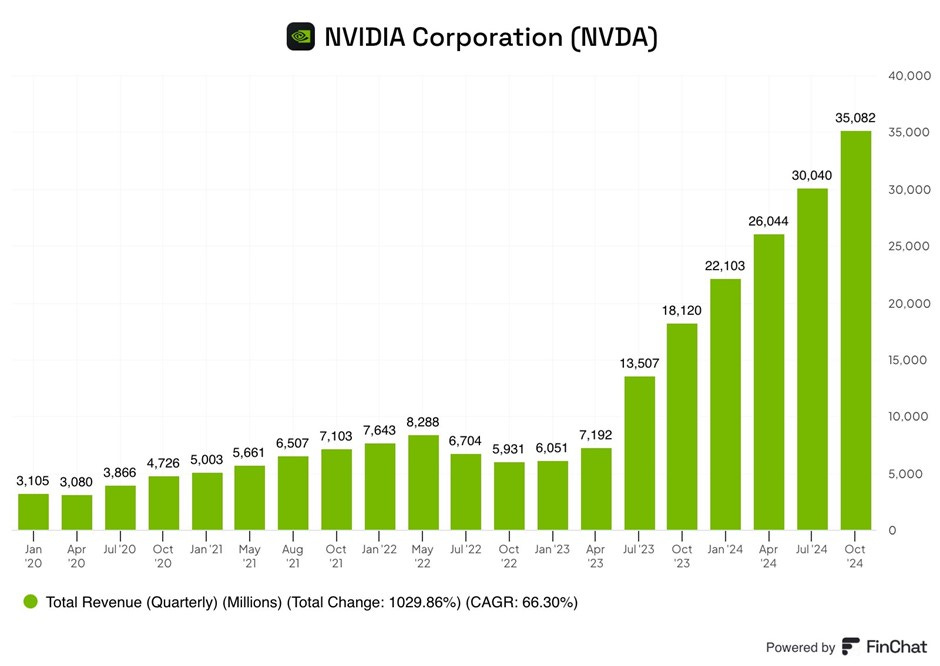

Nvidia itself may be able to repeat them for two to three more quarters, but eventually the law of large numbers will apply as the comparisons become more difficult. The growth rate will slow but may still be at a very impressive level.

Nvidia reported a surge in third-quarter profit and sales as demand for its specialized computer chips that power artificial intelligence systems remains robust.

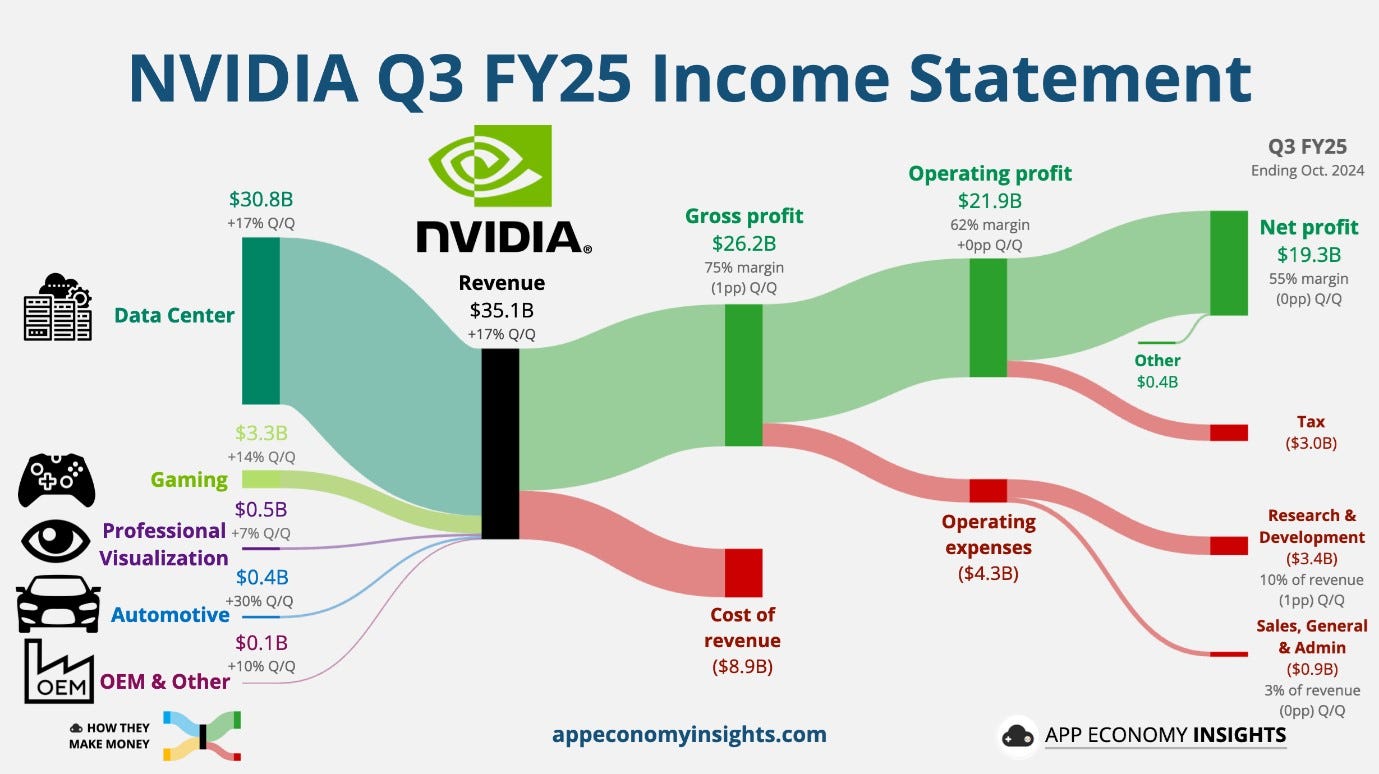

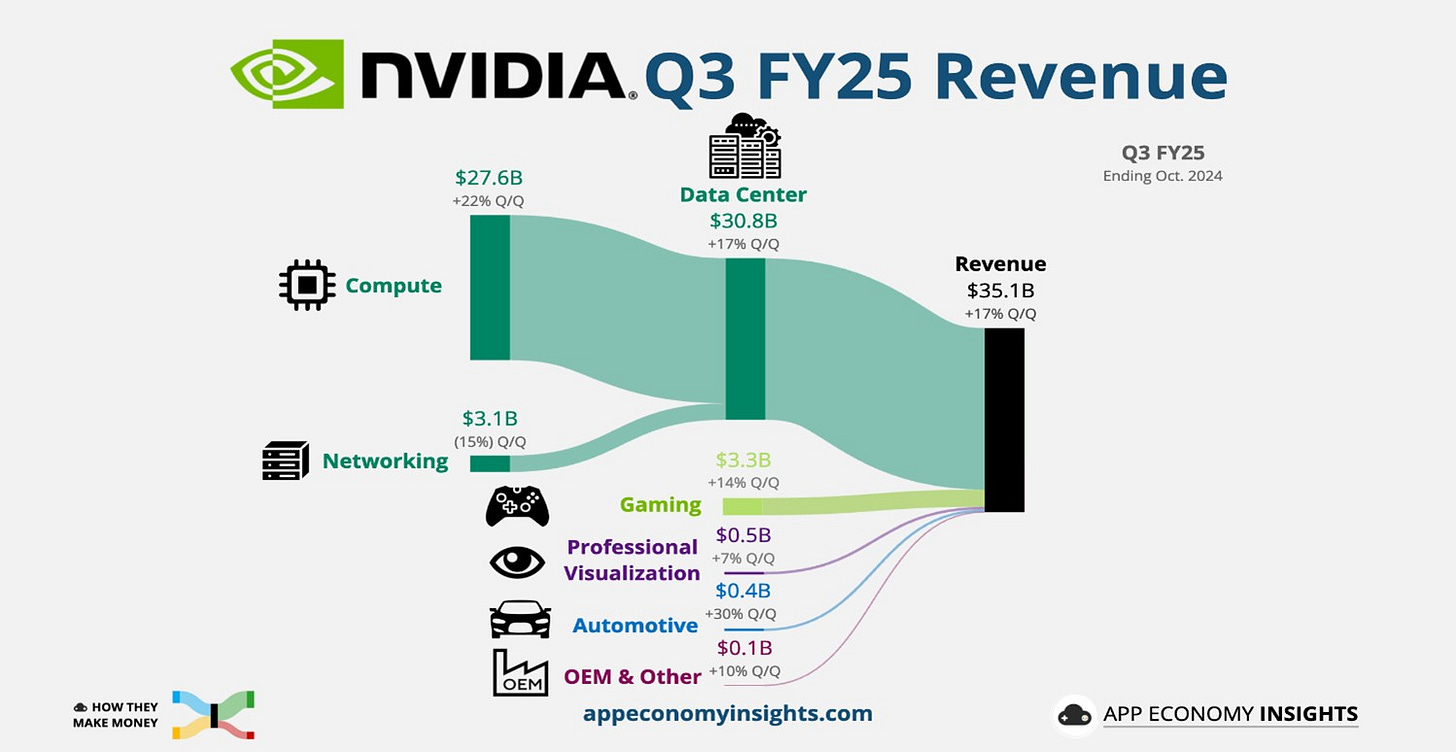

For Q3 2024, Nvidia posted revenue of $35.1bn, up 94% from $18.1bn a year ago and ahead of expectations of $33.1bn.

Operating profit was $21.8bn, up 110%.

Net profit was $19.3bn, up 109%.

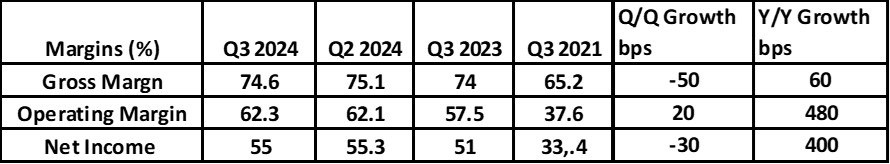

Margins are higher on a y/y basis: operating margin and net profit margin were up 480 bps and 400 bps respectively.

The net profit in Q3 2024 ($19.3bn) is higher than revenue in the same quarter last year ($18.1bn). This is a testament to both the strong revenue growth (93.6%) and a further 400 bps y/y expansion in already high net profit margins.
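The margin figures can be cross-checked from the headline numbers. The sketch below is a rough check rather than a reconstruction of Nvidia's accounts: it assumes the prior-year profit can be backed out from the reported growth rates (the prior-year profit figures themselves are not quoted in this report).

```python
# Figures quoted in this report (USD bn) and the reported y/y growth rates.
revenue_now, revenue_prior = 35.1, 18.1
operating_profit_now, operating_growth = 21.8, 1.10  # up 110% y/y
net_profit_now, net_growth = 19.3, 1.09              # up 109% y/y


def margin_change_bps(profit_now, profit_growth, rev_now, rev_prior):
    """Return the y/y change in profit margin, in basis points.

    The prior-year profit is backed out from the growth rate. That is an
    assumption of this sketch, not a figure taken from the report.
    """
    profit_prior = profit_now / (1 + profit_growth)
    margin_now = profit_now / rev_now
    margin_prior = profit_prior / rev_prior
    return (margin_now - margin_prior) * 10_000


revenue_growth = revenue_now / revenue_prior - 1
operating_bps = margin_change_bps(
    operating_profit_now, operating_growth, revenue_now, revenue_prior
)
net_bps = margin_change_bps(net_profit_now, net_growth, revenue_now, revenue_prior)

print(f"Revenue growth: {revenue_growth:.1%}")
print(f"Operating margin change: {operating_bps:.0f} bps")
print(f"Net profit margin change: {net_bps:.0f} bps")
```

Because the inputs are rounded to one decimal place, the results land close to, rather than exactly on, the reported 480 bps and 400 bps.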

Datacentre revenue was $30.7bn, up 112%, as demand for AI-capable data centres continues at full pace. This is not a surprise, as the annual rate of capital expenditure by the three large cloud hyperscalers plus Meta and Oracle is running at $200bn and is set to rise to $220bn next year.

Within this Datacentre number of about $30bn, Compute accounts for $27bn while Networking is $3bn. Compute grew 22% (q/q), while Networking declined about 15% (q/q); management confirmed on the call that networking revenue was sequentially down.

The Networking business though small is of some interest to us.

Nvidia entered this business because of their acquisition of Mellanox. It is a competitor of Arista Networks (ANET), a top 5 holding in our portfolio.

We recently reported on ANET’s quarterly results and this report can be found here. ANET’s quarterly revenue was $1.8bn and grew at 20%. This suggests it is smaller than Nvidia’s networking division but it is growing faster.

Nvidia’s Gaming revenues, at $3.3bn, are quite large in absolute terms but grew only 15% (y/y).

Automotive Revenues grew 72% but at current levels ($449mn in Q3) are too small in absolute terms to move the needle. However, in the longer term, Automotive could be a meaningful source of future growth for NVDA.

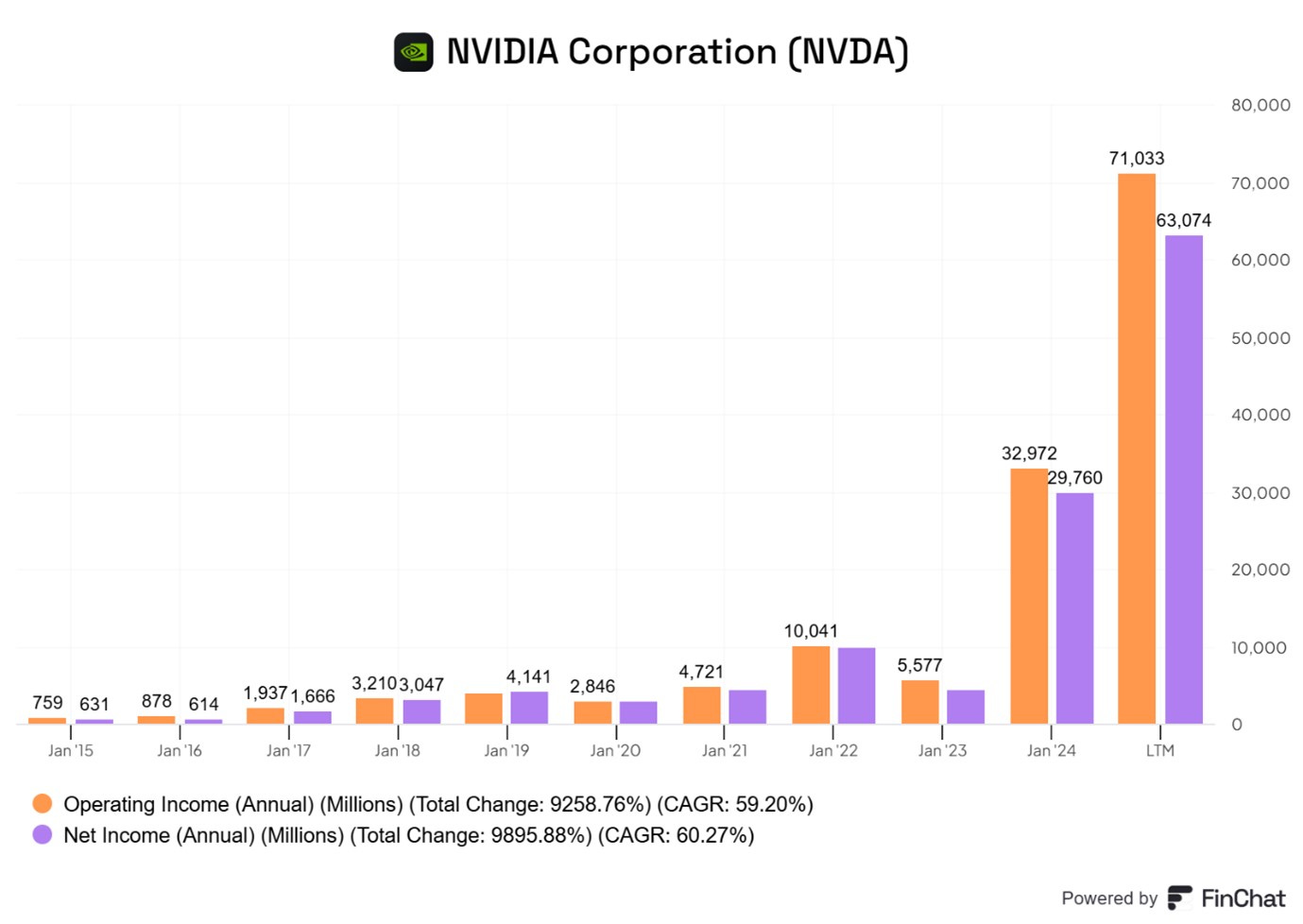

The chart below shows the remarkable growth in both operating profit and net profit in the last two years.

Net profit in the last ten years has grown at a CAGR of 60.3% while EPS has grown at 58.6%. This suggests a small dilution of roughly 1% per annum (1.603 / 1.586 − 1 ≈ 1.1%), probably from net new stock issued for stock-based compensation.
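Because growth rates compound, the dilution implied by the two CAGRs is the ratio of the growth factors rather than the simple difference between the rates. A minimal sketch using the figures quoted above (the function name is ours, for illustration only):

```python
def implied_share_growth(net_profit_cagr, eps_cagr):
    """Annual growth in share count implied by net profit and EPS CAGRs.

    EPS = net profit / shares, so
    (1 + g_profit) = (1 + g_eps) * (1 + g_shares).
    """
    return (1 + net_profit_cagr) / (1 + eps_cagr) - 1


dilution = implied_share_growth(0.603, 0.586)
print(f"Implied annual dilution: {dilution:.2%}")
```

On these inputs the implied dilution comes out at roughly 1.1% a year.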

Cash flows have jumped sharply and as Nvidia’s capex is quite low, Operating Cash Flow is almost the same as Free Cash Flow.

The company has started to use a large part of the greatly increased Free Cash Flow (FCF) to buy back shares. In the current year, they have generated FCF of $56.4bn and bought back shares worth $34bn. The latter is shown as a negative bar in the chart above as it is an outflow of cash.

If this scale of share purchases can be maintained, the company can reverse the dilution noted above. In this scenario, we would see EPS grow at a faster rate than Net Profit.
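To gauge whether buybacks on this scale could plausibly offset dilution, the repurchases can be compared with free cash flow and with the company's market value. This is a rough sketch: it uses the market capitalisation quoted in the Valuation section, assumes repurchases continue at the current pace and price, and ignores new shares issued to employees.

```python
fcf_bn = 56.4           # free cash flow in the current year (USD bn)
buybacks_bn = 34.0      # share repurchases in the current year (USD bn)
market_cap_bn = 3480.0  # market capitalisation quoted in the Valuation section

# Share of free cash flow returned through buybacks.
payout_ratio = buybacks_bn / fcf_bn

# Approximate fraction of the company retired per year at the current price.
# This is a gross figure: it does not net off stock-based compensation.
gross_buyback_yield = buybacks_bn / market_cap_bn

print(f"Buybacks as a share of FCF: {payout_ratio:.0%}")
print(f"Gross buyback yield: {gross_buyback_yield:.2%}")
```

On these figures, about 60% of FCF went on buybacks, retiring roughly 1% of the company a year at the current valuation.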

The recent share repurchases have started to contribute to a slight reduction in the total number of shares outstanding.

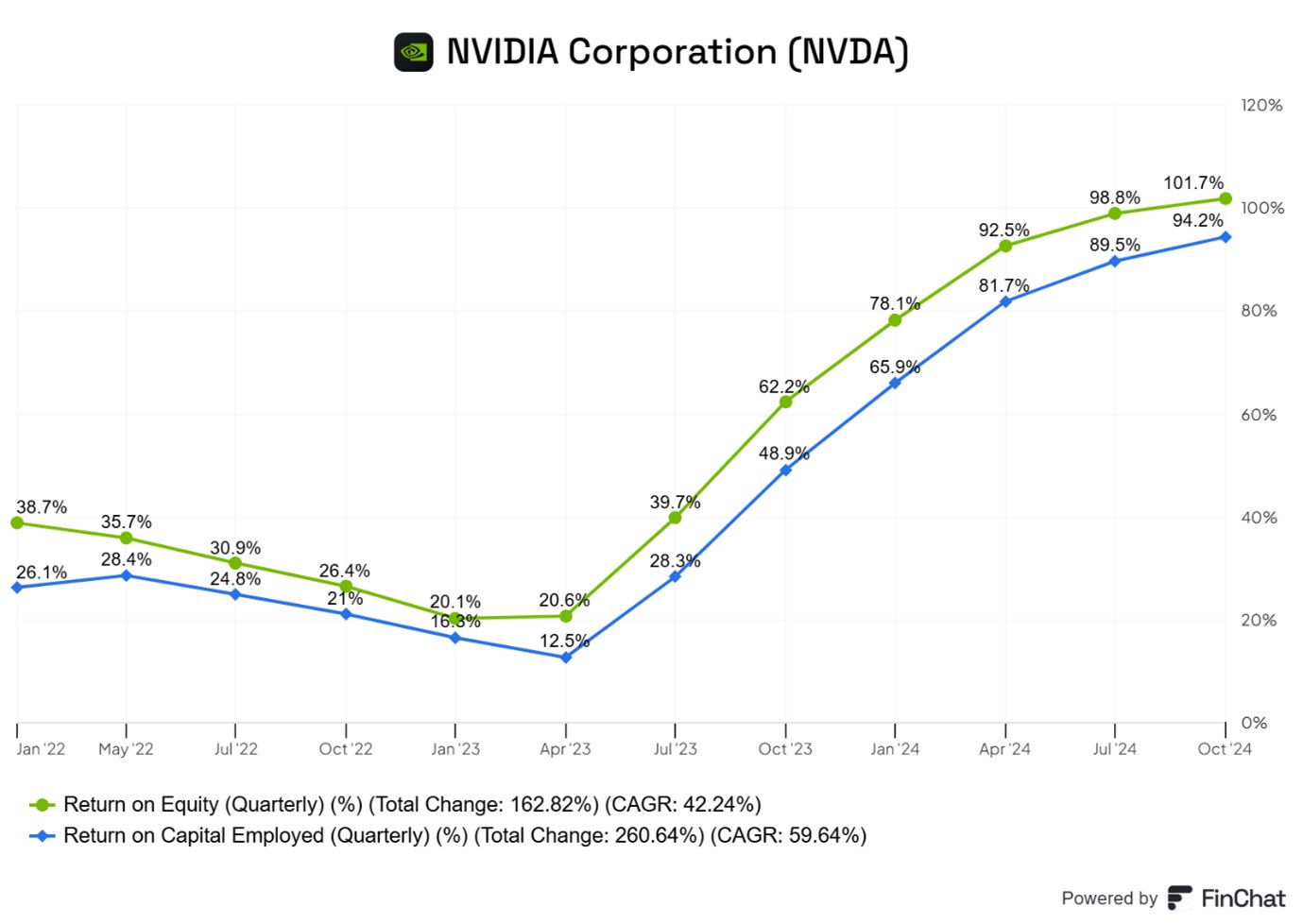

Profitability, as measured by ROE and ROCE, has increased very sharply in the last two or three years. ROE at the end of Q3 was 101%.

Highlights of the Earnings Conference Call

Demand for the Hopper (H series) Chips has been very strong

“NVIDIA Hopper demand is exceptional and sequentially, NVIDIA H200 sales increased significantly to double-digit billions, the fastest product ramp in our company's history.”

“The H200 delivers up to 2 times faster inference performance and up to 50% improved TCO.”

“Cloud service providers were approximately half of our data center sales with revenue increasing more than 2 times year-on-year.”

The Cloud Hyperscalers or Cloud Service Providers (CSPs) have been big buyers

“CSPs deployed NVIDIA H200 infrastructure and high-speed networking with installations scaling to tens of thousands of GPUs to grow their business and serve rapidly rising demand for AI training and inference workloads.”

“NVIDIA H200-powered cloud instances are now available from AWS, CoreWeave, and Microsoft Azure with Google Cloud and OCI (Oracle) coming soon.”

Demand growth is not just coming from CSPs.

“Alongside significant growth from our large CSPs, NVIDIA GPU regional cloud revenue jumped 2 times y/y as North America, EMEA, and Asia Pacific regions ramped NVIDIA cloud instances and sovereign cloud buildout.”

Consumer Internet companies have also been big buyers. Meta and xAI (linked to Twitter) have both talked about having built datacentres with 100,000 Nvidia GPUs in the last three months.

“Consumer Internet revenue more than doubled (y/y) as companies scaled their NVIDIA Hopper infrastructure to support next-generation AI models, training, multimodal and agentic AI, deep learning recommender engines, and generative AI inference and content creation workloads.”

The main focus is on investment in training large language models (LLMs) such as Meta’s Llama. The inference side of LLMs also generates demand for Nvidia chips. For inference, customers can buy less advanced, older chips such as Ampere.

“NVIDIA's Ampere and Hopper infrastructures are fuelling inference revenue growth for customers. NVIDIA is the largest inference platform in the world. Our large installed base and rich software ecosystem encourage developers to optimize for NVIDIA and deliver continued performance and TCO improvements.”

“We are the largest inference platform in the world today because our installed base is so large and everything that was trained on Amperes and Hoppers inferences incredibly on Amperes and Hoppers. And as we move to Blackwells for training foundation models, it leaves behind it a large installed base of extraordinary infrastructure for inference. And so we're seeing inference demand go up. We're seeing inference time scaling go up.”

One aspect that gets less attention is how Nvidia uses software improvements to boost the performance of old chips.

“Rapid advancements in NVIDIA's software algorithms boosted Hopper inference throughput by an incredible 5 times in one year and cut time to first token by 5 times.”

“Our upcoming release of NVIDIA NIM will boost Hopper Inference performance by an additional 2.4 times.”

“Continuous performance optimizations are a hallmark of NVIDIA and drive increasingly economic returns for the entire NVIDIA installed base.”

Source: Liberty PF Substack

NVDA keep optimizing the existing hardware/software stack.

The continuous improvement makes NVDA very hard to compete with. Even if a rival could match them at a point in time (usually with a slower, cheaper chip, so that price/performance is similar to Nvidia’s), it would still have to keep improving its own stack to keep up with the ongoing optimizations that lower the total cost of ownership (TCO) over time.

Prior to the release of these earnings numbers, there were rumours of overheating problems with Blackwell. The fear was that this new, most advanced chip would be delayed again. In the conference call, Nvidia put this fear to rest.

“Blackwell is in full production after a successfully executed mask change. We shipped 13,000 GPU samples to customers in the third quarter, including one of the first Blackwell DGX engineering samples to OpenAI.”

“Every customer is racing to be the first to market. Blackwell is now in the hands of all of our major partners, and they are working to bring up their Datacentres. We are integrating Blackwell systems into the diverse Data Center configurations of our customers.”

In a talk at the Nvidia AI Summit Japan, Jensen Huang, Nvidia’s CEO, describes Blackwell as a whole system, not just the GPU chip. It is incredibly impressive, and the relevant section starts at 18 minutes 32 seconds.

“Blackwell is a full stack, full infrastructure, AI data center scale system with customizable configurations needed to address a diverse and growing AI market from x86 to ARM, training to inferencing GPUs, InfiniBand to Ethernet switches, and NVLINK and from liquid-cooled to air-cooled.”

“Blackwell demand is staggering, and we are racing to scale supply to meet the incredible demand customers are placing on us. Customers are gearing up to deploy Blackwell at scale.”

“We are working right now on the quarter that we're in and building what we need to ship in terms of Blackwell. We have every supplier on the planet working seamlessly with us to do that. And once we get to next quarter, we'll help you understand in terms of that ramp that we'll see to the next quarter and after that.”

“Oracle announced the world's first Zettascale AI Cloud computing clusters that can scale to over 131,000 Blackwell GPUs to help enterprises train and deploy some of the most demanding next-generation AI models. Yesterday, Microsoft announced they will be the first CSP to offer in private preview Blackwell-based cloud instances powered by NVIDIA GB200, and Quantum InfiniBand.”

The new Blackwell chips are much more advanced and complex than their predecessor, Hopper, which is the current gold standard and dominant product.

Various independent organisations are testing the Blackwell samples they have been supplied with.

“Last week, Blackwell made its debut on the most recent round of MLPerf Training results, sweeping the per GPU benchmarks and delivering a 2.2 times leap in performance over Hopper.”

“The results also demonstrate our relentless pursuit to drive down the cost of compute. Just 64 Blackwell GPUs are required to run the GPT-3 benchmark compared to 256 H100s or a 4 times reduction in cost.”

“NVIDIA Blackwell architecture with NVLINK Switch enables up to 30 times faster inference performance and a new level of inference scaling throughput and response time that is excellent for running new reasoning inference applications like OpenAI's o1 model.”

“Blackwell production is in full steam. …we will deliver this quarter more Blackwells than we had previously estimated. And so, the supply chain team is doing an incredible job working with our supply partners to increase Blackwell, and we're going to continue to work hard to increase Blackwell through next year. Demand exceeds our supply and that's expected as we're in the beginnings of this generative AI revolution as we all know.”

“And we're at the beginning of a new generation of foundation models that are able to do reasoning and able to do long thinking and of course, one of the really exciting areas is physical AI, AI that now understands the structure of the physical world. And so, Blackwell demand is very strong. Our execution is on - is going well.”

Demand for Nvidia does not just come from large cap tech companies. There are now lots of AI start-ups.

“With every new platform shift, a wave of start-ups is created. Hundreds of AI native companies are already delivering AI services with great success. Though Google, Meta, Microsoft, and OpenAI are the headliners and Anthropic, Perplexity, Mistral, Adobe Firefly, Runway, Midjourney, Lightricks, Harvey, Codeium, Cursor, and Bridge are seeing great success, while thousands of AI-native startups are building new services.”

“The next wave of AI are Enterprise AI and Industrial AI.”

Enterprise AI

Nvidia is a B2B company. They provide enabling technology and products which their customers can use to process workloads to create AI agents for their final clients. AI Agents are essentially AI employees and like other employees, they must be trained, tested and guard railed before they can be deployed.

CEO Huang talks about these AI workers from about 25 minutes in the YouTube video cited above.

Many companies, large and small, are now racing to build AI Agents for enterprises using the Nvidia AI Enterprise Suite.

“Enterprise AI is in full throttle. NVIDIA AI Enterprise, which includes NVIDIA NeMo and NIM microservices is an operating platform of agentic AI.”

“Industry leaders are using NVIDIA AI to build Co-Pilots and agents. Working with NVIDIA, Cadence, Cloudera, Cohesity, NetApp, Nutanix, Salesforce, SAP and ServiceNow are racing to accelerate development of these applications with the potential for billions of agents to be deployed in the coming years. Consulting leaders like Accenture and Deloitte are taking NVIDIA AI to the world's enterprises.”

“Accenture launched a new business group with 30,000 professionals trained on NVIDIA AI technology to help facilitate this global build-out. Additionally, Accenture with over 770,000 employees is leveraging NVIDIA-powered Agentic AI applications internally, including in one case that cuts manual steps in marketing campaigns by 25% to 35%.”

“Nearly 1,000 companies are using NVIDIA NIM, and the speed of its uptake is evident in NVIDIA AI Enterprise monetization.”

“We expect NVIDIA AI Enterprise full year revenue to increase over 2 times from last year and our pipeline continues to build.”

“Overall, our software, service, and support revenue is annualizing at $1.5 billion, and we expect to exit this year annualizing at over $2 billion. Industrial AI and robotics are accelerating. This is triggered by breakthroughs in physical AI, foundation models that understand the physical world.”

In addition to Enterprise demand for AI agents, industrial companies are looking to deploy AI and robotics.

“We built NVIDIA Omniverse for developers to build, train, and operate industrial AI and robotics. Some of the largest industrial manufacturers in the world are adopting NVIDIA Omniverse to accelerate their businesses, automate their workflows, and to achieve new levels of operating efficiency.”

“Foxconn, the world's largest electronics manufacturer is using digital twins and industrial AI built on NVIDIA Omniverse to speed the bring up of its Blackwells factories and drive new levels of efficiency. In its Mexico facility alone, Foxconn expects to reduce - a reduction of over 30% in annual kilowatt-hour usage.”

China

In the last two quarters, the only disappointing element in NVDA’s numbers has been demand in China, as NVDA has not been able to sell its most advanced chips and products there due to US government-mandated restrictions.

“From a geographic perspective, our Datacentre revenue in China grew sequentially due to shipments of export-compliant Hopper products to industries. As a percentage of total Datacentre revenue, it remains well below levels prior to the onset of export controls. We expect the market in China to remain very competitive going forward.”

Sovereign AI

Some countries want to have AI datacentres on their own soil so they can ensure data privacy. Often, they want to develop local language models using local infrastructure. This market segment, which is appealing to countries in the Middle East and Asia, is known as Sovereign AI.

“Our Sovereign AI and our pipeline going forward is still absolutely intact as those are working to build these foundational models in their own language, in their own culture, and working in terms of the enterprises within those countries.”

“Our sovereign AI initiatives continue to gather momentum as countries embrace NVIDIA accelerated computing for a new industrial revolution powered by AI. India's leading CSPs include Tata Communications and Yotta Data Services are building AI factories for tens of thousands of NVIDIA GPUs. By year-end, they will have boosted NVIDIA GPU deployments in the country by nearly 10 times. Infosys, TCS, Wipro are adopting NVIDIA AI Enterprise and upskilling nearly 0.5 million developers and consultants to help clients build and run AI agents on our platform.”

Japan demand

CEO Jensen Huang had just come back from an AI Summit in Japan. Customer demand from Japan was at the front of his mind.

“In Japan, SoftBank is building the nation's most powerful AI supercomputer with NVIDIA DGX Blackwell and Quantum InfiniBand. SoftBank is also partnering with NVIDIA to transform the telecommunications network into a distributed AI network with NVIDIA AI Aerial and AI-RAN platform that can process both 5G RAN and AI on CUDA. Leaders across Japan, including Fujitsu, NEC and NTT are adopting NVIDIA AI Enterprise and major consulting companies, including EY Strategy and Consulting will help bring NVIDIA AI technology to Japan's industries.”

Networking

Nvidia entered the Networking business as a result of their acquisition of Mellanox. It is a competitor of Arista Networks (ANET) which is also a top 5 holding in our portfolio.

“Networking revenue increased 20% year-on-year…The networking revenue was sequentially down; networking demand is strong and growing and we intend -- anticipate sequential growth in Q4. CSPs and supercomputing centres are using and adopting the NVIDIA InfiniBand platform to power new H200 clusters.”

“The growth year-over-year is tremendous and our focus since the beginning of our acquisition of Mellanox has really been about building together the work that we do in terms of -- in the Data Center. The networking is such a critical part of that. Our ability to sell our networking with many of our systems that we are doing in data center is continuing to grow and do quite well.”

NVDA seem to be making good progress in Networking. We need to monitor this as it may have negative implications for the shares of Arista Networks.

Gaming

In 1995, Nvidia’s main business was making GPUs for gamers. This remains a significant business, with NVDA seeing good demand for its GeForce RTX chips.

“Gaming revenue of $3.3 billion increased 14% sequentially and 15% year-on-year. Q3 was a great quarter for gaming with notebook, console, and desktop revenue, all growing sequentially and year-on-year.”

“RTX end-demand was fuelled by strong back-to-school sales as consumers continue to choose GeForce RTX GPUs and devices to power gaming, creative, and AI applications. Channel inventory remains healthy, and we are gearing up for the holiday season.”

“We began shipping new GeForce RTX AI PCs with up to 321 AI tops from ASUS and MSI with Microsoft's Copilot+ capabilities anticipated in Q4. These machines harness the power of RTX ray tracing and AI technologies to supercharge gaming, photo and video editing, image generation, and coding.”

Automotive

As cars become more technologically advanced and manufacturers move towards EVs and autonomous driving, Nvidia’s products and services are seeing strong demand growth from auto manufacturers.

“Revenue was a record $449 million, up 30% sequentially and up 72% y/y. Strong growth was driven by self-driving ramps of NVIDIA Orin and robust end-market demand for NEVs. Volvo Cars is rolling out its fully electric SUV built on NVIDIA Orin and DriveOS.”

Has scaling up Data centres reached limits to its growth?

A week before the earnings numbers were released, there was some concern about an interview with Sam Altman of OpenAI in which he argued that there are limits to the returns from scaling up (with ever larger datacentre capacity) when training LLMs.

CEO Huang was asked for his views on the debate about scaling. His response was that he believes the benefits of scaling continue to hold.

“A foundation model pre-training scaling is intact and it's continuing. As you know, this is an empirical law, not a fundamental physical law, but the evidence is that it continues to scale.”

“we've now discovered two other ways to scale. One is post-training scaling. Of course, the first generation of post-training was reinforcement learning human feedback, but now we have reinforcement learning AI feedback and all forms of synthetic data generated data that assists in post-training scaling.”

OpenAI’s release of the o1 or Strawberry model in September 2024 was notable. It is the first in a planned series of “reasoning” models that have been trained to answer more complex questions, faster than a human can. CEO Huang argues that this involves a new type of scaling called test time scaling.

“The longer it thinks, the better and higher-quality answer it produces, and it considers approaches like chain of thought and multi-path planning and all kinds of techniques necessary to reflect and so on and so forth and it's intuitively, it's a little bit like us doing thinking in our head before we answer a question.”

Therefore, in Huang’s view, there are three types of scaling and they will contribute to even greater demand for Nvidia products and services.

“..we now have three ways of scaling and we're seeing all three ways of scaling. And as a result of that, the demand for our infrastructure is really great.”

“And so my expectation is that for the foreseeable future, we're going to be scaling pre-training, post-training as well as inference time scaling and which is the reason why I think we're going to need more and more compute and we're going to have to drive as hard as we can to keep increasing the performance by X factors at a time so that we can continue to drive down the cost and continue to increase their revenues and get the AI revolution going.”

“The computing scale of pre-training and post-training continues to grow exponentially.”

The Nvidia Ecosystem

Given the importance of Nvidia’s products, they are working with a lot of major companies.

“And so almost every company in the world seems to be involved in our supply chain. And we've got great partners, everybody from, of course, TSMC and Amphenol, the connector company, Vertiv and SK Hynix and Micron, Amkor and KYEC and there's Foxconn and the many factories that they've built and Quanta and Wiwynn and gosh, Dell and HP and Super Micro, Lenovo and the number of companies is just really quite incredible…”

Setting up new AI datacentres involves a lot of engineering.

“And there's obviously a lot of engineering that we're doing across the world. The engineering that we do with them is, as you know, rather complicated. And the reason for that is because although we build full stack and full infrastructure, we disaggregate all of the AI supercomputer and we integrate it into all of the custom datacentres in architectures around the world.”

“That integration process is something we've done several generations now. We're very good at it, but still, there's still a lot of engineering that happens at this point. But as you see from all of the systems that are being stood up, Blackwell is in great shape.”

Two Fundamental Shifts in Computing

At each earnings conference call, CEO Jensen Huang gives a fresh and very interesting perspective on the most recent and relevant technology trends. He did not disappoint on this occasion when he spoke about two fundamental shifts in computing.

“We are really at the beginnings of two fundamental shifts in computing

1. The first is moving from coding that runs on CPUs to machine learning that creates neural networks that runs on GPUs.

2. On the other hand, secondarily, on top of these systems are going to be -- we're going to be creating a new type of capability called AI.”

The first shift has happened to a large extent.

“that fundamental shift from coding to machine learning is widespread at this point. There are no companies who are not going to do machine learning. And so machine learning is also what enables generative AI. …a trillion dollars’ worth of computing systems and datacentres around the world is now being modernized for machine learning.”

The second change, the emergence of a new type of AI capability, is described by Huang in terms of AI factories.

“Second, the age of AI is in full steam. Generative AI is not just a new software capability, but a new industry with AI factories manufacturing digital intelligence, a new industrial revolution that can create a multi trillion-dollar AI industry.”

“They're generating something. Just like we generate electricity, we're now going to be generating AI. And if the number of customers is large, just as the number of consumers of electricity is large, these generators are going to be running 24/7.”

These new AI factories will serve new emerging AI-native companies. The latter will use the AI factories to create new AI services.

“These companies are being created because people see that there's a platform shift and there's a brand-new opportunity to do something completely new. And so, my sense is that we're going to continue to build out to modernize IT, modernize computing.”

“And they're going to create and generate out of their services, essentially intelligence. Some of it would be digital artist intelligence like Runway. Some of it would be basic intelligence, like OpenAI. Some of it would be legal intelligence like Harvey. Digital marketing intelligence like Reuters and so on.”

The CEO was asked whether the high current levels of demand will lead to “indigestion” among the customers.

“There will be no digestion issue until we modernize a trillion dollars with the datacentres. Those - if you look at the world's datacentres, the vast majority of it is built for a time when we wrote applications by hand and we ran them on CPUs. It's just not a sensible thing to do anymore. If you have -- if every company's CapEx, if they're ready to build a data center tomorrow, they ought to build it for a future of machine-learning and generative AI.”

“what's going to happen over the course of next X number of years, and let's assume that over the course of four years, the world's datacentres could be modernized as we grow into IT. As you know, IT continues to grow about 20%, 30% a year, let's say. And let's say by 2030, the world's datacentres for computing is, call it a couple of trillion dollars.”

Physical AI

Another aspect of the new AI factories’ output will be Physical AI.

“And there's now a whole new era of AI if you will, a whole new genre of AI called physical AI, Physical AI understands the physical world and it understands the meaning of the structure and understands what's sensible and what's not and what could happen and what won't and not only does it understand but it can predict and roll out a short future.”

“That capability is incredibly valuable for industrial AI and robotics. And so that's fired up so many AI-native companies and robotics companies and physical AI companies that you're probably hearing about. And it's really the reason why we built Omniverse.”

NVIDIA Omniverse is a platform of code and services that enables developers to easily integrate OpenUSD (an ecosystem for describing, composing, simulating and collaborating within 3D worlds) and NVIDIA RTX™ rendering technologies into software tools and simulation workflows for building AI systems.

“Omniverse is so that we can enable these AIs to be created and learn in Omniverse and learn from synthetic data generation and reinforcement learning physics feedback instead of human feedback is now physics feedback.”

More information on the Nvidia Omniverse can be found here.

Conclusion

“The age of AI is upon us and it's large and diverse. NVIDIA's expertise, scale, and ability to deliver full stack and full infrastructure let us serve the entire multi-trillion dollar AI and robotics opportunities ahead. From every hyperscale cloud, enterprise private cloud to sovereign regional AI clouds, on-prem to industrial edge and robotics.”

Summary

This was an extraordinarily strong performance by NVDA.

It achieved 94% growth in revenue and about 110% growth in Net Profit and EPS.

Operating margins and Net Profit margins were up 480 bps and 400 bps respectively on a y/y basis.

Net profit in Q3 2024 ($19.3bn) is higher than the revenue in the same quarter last year ($18.1bn).

Hopper, Ampere and other existing products continue to see strong demand.

The new Blackwell is a huge improvement on current products. As it ramps up, it is very likely to significantly boost revenues, profits and cash flows over the next few years.

Valuation

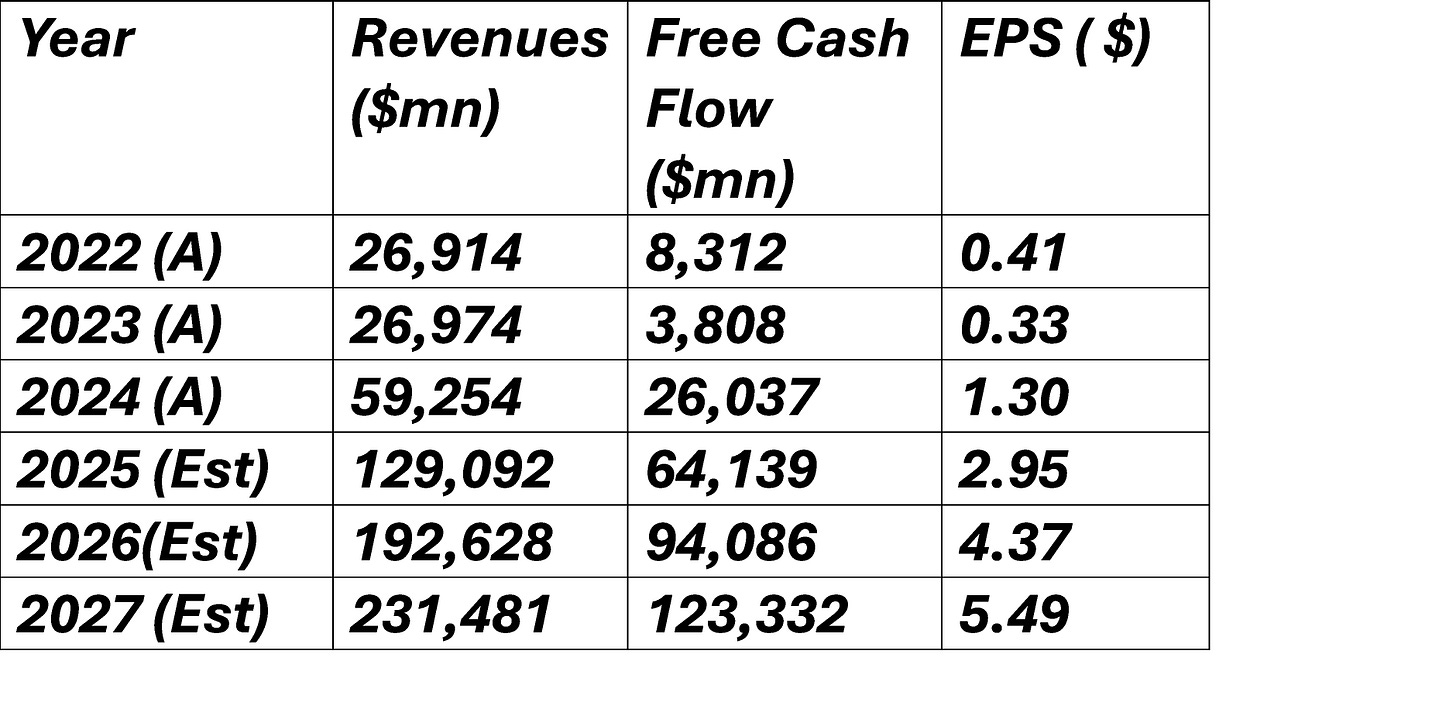

The consensus analysts’ forecasts for Revenues, Free Cash Flow and EPS are as follows:

At the current price of $141.95 per share, NVDA stock is trading at a two-year forward P/E ratio of 32X. At a current market capitalisation of $3,480bn, the two-year forward Price to Free Cash Flow ratio is 37X.

This implies a two-year forward Earnings yield of about 3.1% and a two-year forward Free Cash flow yield of 2.7%.
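A yield is simply the inverse of the corresponding multiple, and the same multiples also reveal the forward EPS and FCF they imply. The sketch below uses only the price, market capitalisation and multiples quoted above; the implied EPS and FCF are back-calculated here and are not taken from the consensus table.

```python
price = 141.95          # current share price (USD)
market_cap_bn = 3480.0  # current market capitalisation (USD bn)
forward_pe = 32.0       # two-year forward P/E
forward_p_fcf = 37.0    # two-year forward price to free cash flow

# A yield is the reciprocal of the multiple.
earnings_yield = 1 / forward_pe
fcf_yield = 1 / forward_p_fcf

# Back out what the multiples imply about two-year forward EPS and FCF.
implied_eps = price / forward_pe
implied_fcf_bn = market_cap_bn / forward_p_fcf

print(f"Earnings yield: {earnings_yield:.1%}")
print(f"FCF yield: {fcf_yield:.1%}")
print(f"Implied forward EPS: ${implied_eps:.2f}")
print(f"Implied forward FCF: ${implied_fcf_bn:.0f}bn")
```

The multiples imply two-year forward EPS of roughly $4.4 per share and forward FCF of roughly $94bn.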

At first glance, a P/E of 32X looks cheap for a company which has an ROE of 100% and likely EPS growth of 25% plus for the next few years. This is surprising given the extent to which the share price has already appreciated.

We conducted a Discounted Cash Flow (DCF) Calculation.

We made the following assumptions. We are assuming a significant slowdown in the rate of Revenue and Free Cash Flow growth in FY 2028/2029.

We also assume a risk-free rate of 4.6% and a Market Risk premium of 3%. The Stock Beta is 1.7. These numbers result in a Weighted Average Cost of Capital (WACC) of 9.5%.
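The report does not spell out how the 9.5% is built up. One common route is to estimate the cost of equity with the Capital Asset Pricing Model (CAPM) and then blend it with the after-tax cost of debt. The sketch below assumes that approach and computes only the equity leg, since no debt weight or cost of debt is given here.

```python
def capm_cost_of_equity(risk_free, beta, market_risk_premium):
    """CAPM: cost of equity = risk-free rate + beta * market risk premium."""
    return risk_free + beta * market_risk_premium


# Inputs as stated in the text above.
cost_of_equity = capm_cost_of_equity(
    risk_free=0.046, beta=1.7, market_risk_premium=0.03
)
print(f"CAPM cost of equity: {cost_of_equity:.1%}")
```

On these inputs the cost of equity is about 9.7%, so a WACC of 9.5% would be consistent with a small weighting of cheaper debt. That reading is our inference, not something stated in the model's assumptions.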

Finally, we assume that in FY 2029, the terminal exit multiple for EV/FCF is 32X.

With these assumptions, we calculated a theoretical NVDA stock value of $169.4. This means the stock is currently trading at about a 16% discount to the implied theoretical value, equivalent to roughly 20% upside from the current price. This discount is much larger than we have seen for some time in companies we have been looking at.

If we increase the WACC to 10%, the implied share price declines to $165.2.

If the terminal exit multiple for EV/FCF is reduced to a more conservative 28X, we get a theoretical value of $151.0.
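The three scenario values can be compared with the current price in two ways: upside measured from the price, and discount measured from the theoretical value. The two are not the same number. A minimal sketch using the figures quoted above:

```python
price = 141.95  # current share price (USD)

# Theoretical values per share from the three DCF scenarios in the text.
scenarios = {
    "Base case (WACC 9.5%, 32X exit)": 169.4,
    "WACC raised to 10%": 165.2,
    "Exit multiple cut to 28X": 151.0,
}


def upside(value, current_price):
    """Gain needed for the price to reach the theoretical value."""
    return value / current_price - 1


def discount(value, current_price):
    """How far the price sits below the theoretical value."""
    return 1 - current_price / value


for name, value in scenarios.items():
    print(f"{name}: upside {upside(value, price):.1%}, "
          f"discount {discount(value, price):.1%}")
```

In the base case the upside is a little over 19% while the discount to theoretical value is a little over 16%. With the 28X exit multiple, the upside shrinks to roughly 6%.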

Assuming an EV/FCF ratio of 32X may look aggressive but may perhaps be justified for a high-growth company.

We assumed a significant slowdown in Revenue and Free Cash Flow growth in FY 2028/2029. The key risk to our forecast is that we may be too conservative about the slowdown we are projecting for those forward years.

We should note that the problem with all such valuation models is they are highly dependent on the inputs. If one makes small changes in the assumptions and therefore in the inputs, there can be a wide range in the output.
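The sensitivity to inputs can be illustrated with a stripped-down DCF using an exit multiple. This is a sketch of the general technique, not our actual model: the free cash flow path below is purely hypothetical, net cash is ignored, and the share count is simply backed out from the market capitalisation and share price quoted earlier.

```python
def dcf_value_per_share(fcfs_bn, wacc, exit_multiple, shares_bn):
    """Value per share from explicit FCFs plus an exit-multiple terminal value.

    Net cash or debt is ignored, so enterprise value is treated as
    equity value in this simplified sketch.
    """
    pv_fcfs = sum(fcf / (1 + wacc) ** t for t, fcf in enumerate(fcfs_bn, start=1))
    terminal_value = exit_multiple * fcfs_bn[-1]
    pv_terminal = terminal_value / (1 + wacc) ** len(fcfs_bn)
    return (pv_fcfs + pv_terminal) / shares_bn


# HYPOTHETICAL free cash flow path (USD bn), for illustration only.
hypothetical_fcfs_bn = [60.0, 95.0, 120.0, 135.0, 145.0]

# Share count implied by the market cap and price quoted in this report.
shares_bn = 3480.0 / 141.95

base = dcf_value_per_share(hypothetical_fcfs_bn, 0.095, 32, shares_bn)
higher_wacc = dcf_value_per_share(hypothetical_fcfs_bn, 0.10, 32, shares_bn)
lower_multiple = dcf_value_per_share(hypothetical_fcfs_bn, 0.095, 28, shares_bn)

print(f"Base: {base:.1f}")
print(f"WACC 10%: {higher_wacc:.1f}")
print(f"Exit multiple 28X: {lower_multiple:.1f}")
```

Most of the value in a set-up like this sits in the terminal value, which is why changing the exit multiple moves the output more than a half-point change in WACC.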

Conclusions

Nvidia is a remarkable company which is currently in the middle of a remarkable phase of profitable growth. The share price has given a total return of 92% CAGR in the last five years and 76% CAGR for the last 10 years.
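It is easy to lose sight of what compounding at these rates means. A short calculation converts each CAGR into the multiple of the starting investment it implies (the function name is ours, for illustration):

```python
def growth_multiple(cagr, years):
    """Multiple of the starting value after compounding at `cagr` for `years`."""
    return (1 + cagr) ** years


five_year_multiple = growth_multiple(0.92, 5)
ten_year_multiple = growth_multiple(0.76, 10)

print(f"5 years at 92% CAGR: {five_year_multiple:.0f}x")
print(f"10 years at 76% CAGR: {ten_year_multiple:.0f}x")
```

A 92% CAGR over five years multiplies the starting investment roughly 26 times; a 76% CAGR over ten years multiplies it roughly 285 times.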

NVDA has inched ahead of Apple as the largest company in the world, and it accounts for 7% of the market capitalisation of the S&P 500 Index.

Can the share price continue to advance? Our own best estimate suggests the company could be worth as much as $170 per share, a further 20% above the current price.

After the results, many Wall Street analysts upgraded their price targets. Some of these are shown below. The highest is $220 and the lowest is $140.

1. Rosenblatt: $220 (from $200)

2. Baird: $195 (from $150)

3. Evercore ISI: $190 (from $189)

4. BofA Securities: $190

5. Jefferies: $185

6. Bernstein: $175 (from $155)

7. Citi: $175 (from $170)

8. Mizuho: $175 (from $165)

9. TD Cowen: $175 (from $165)

10. Wedbush: $175 (from $160)

11. Truist Securities: $169 (from $167)

12. Morgan Stanley: $168 (from $160)

13. Goldman Sachs: $165 (from $150)

14. Barclays: $160 (from $145)

15. Deutsche Bank: $140 (from $115)
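The targets listed above can be summarised and compared with our own DCF value of $169.4. A minimal sketch using only the figures in the list:

```python
from statistics import mean, median

# Post-results price targets from the list above (USD).
price_targets = {
    "Rosenblatt": 220, "Baird": 195, "Evercore ISI": 190,
    "BofA Securities": 190, "Jefferies": 185, "Bernstein": 175,
    "Citi": 175, "Mizuho": 175, "TD Cowen": 175, "Wedbush": 175,
    "Truist Securities": 169, "Morgan Stanley": 168,
    "Goldman Sachs": 165, "Barclays": 160, "Deutsche Bank": 140,
}
own_estimate = 169.4  # our base-case DCF value per share

values = list(price_targets.values())
highest, lowest = max(values), min(values)
median_target = median(values)
mean_target = mean(values)
targets_below_own = sum(1 for v in values if v < own_estimate)

print(f"Range: ${lowest} to ${highest}")
print(f"Median: ${median_target}, mean: ${mean_target:.0f}")
print(f"Targets below our estimate: {targets_below_own} of {len(values)}")
```

The targets run from $140 to $220 with a median of $175 and a mean of about $177. Only five of the fifteen sit below our own figure.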

Our own estimate is at the lower end of the range.

We have a 3% allocation to Nvidia. In the light of these results, we are going to add an additional 1% allocation.

This is a difficult decision as we had sold at $131 and had not bought back at about $103. However, the company has continued to perform and the business has improved greatly. When the facts change, we should be willing to change our minds and not use past mistakes as a reason for inaction. We may regret buying shares at this level but, as ever, time will tell.