Nvidia (NVDA)

Q1 2025 Quarterly Results

We first covered Nvidia (NVDA) on 30th May 2023. The report can be found here. We have since published several follow-up reports, some of which can be found here, here, and here.

We would like to (belatedly) revisit the stock in light of the most recent quarterly results. Nvidia is the best-performing stock in our portfolio and is now our largest holding, at about 10%.

Summary

In the last year, NVDA has ridden the GenAI wave impressively. 87% of its business now involves selling chips, software, networking, and other services to Datacentres. The share price has risen 5.5X since our original note a year ago.

Some investors have made so much money on the stock that they could buy cars like the one shown below out of their profits!

We will start by defining some key terms that are important for understanding NVDA.

CPU: Central Processing Unit. The basic electronic circuit that carries out the tasks and data processing required by applications in a computer. Most PC and server CPUs are based on the x86 architecture, which was developed by Intel. Intel and AMD were the leading CPU players during the PC revolution and remain the two most important suppliers.

GPU: Graphics Processing Unit. This is an electronic circuit used to process visual information and data. In the 1990s there were numerous GPU players, but Nvidia has come to dominate, with AMD a distant second. Demand for, and technical developments in, GPUs were driven initially by gamers and more recently by the boom in crypto mining. In the last 18 months, demand for GPUs has increased exponentially due to the rise of Generative AI and Large Language Models (LLMs). Nvidia has about 87% market share in GPUs for Generative AI.

DGX: Nvidia’s full-stack platform combining its chipsets and software services.

Hopper: Nvidia’s modern GPU architecture iterations are named after famous scientists. Earlier lines were named Ampere and Volta. The current series, Hopper, is named after the computer scientist Grace Hopper.

H100: NVDA’s Hopper 100 Chip. (H200 is Hopper 200)

Ampere: The GPU architecture that Hopper replaced, with a claimed 16x performance boost.

Blackwell: The next iteration of the Nvidia GPU architecture after Hopper. It is named after the American mathematician David Blackwell.

Grace: Nvidia’s new CPU architecture designed for accelerated compute and GenAI. It is a key element in the DGX platform.

CUDA: Nvidia-designed software stack used with its GPUs. CUDA is an important element in Nvidia’s competitive advantage and its economic moat. It is a parallel computing and programming platform purpose-built for Nvidia GPUs.

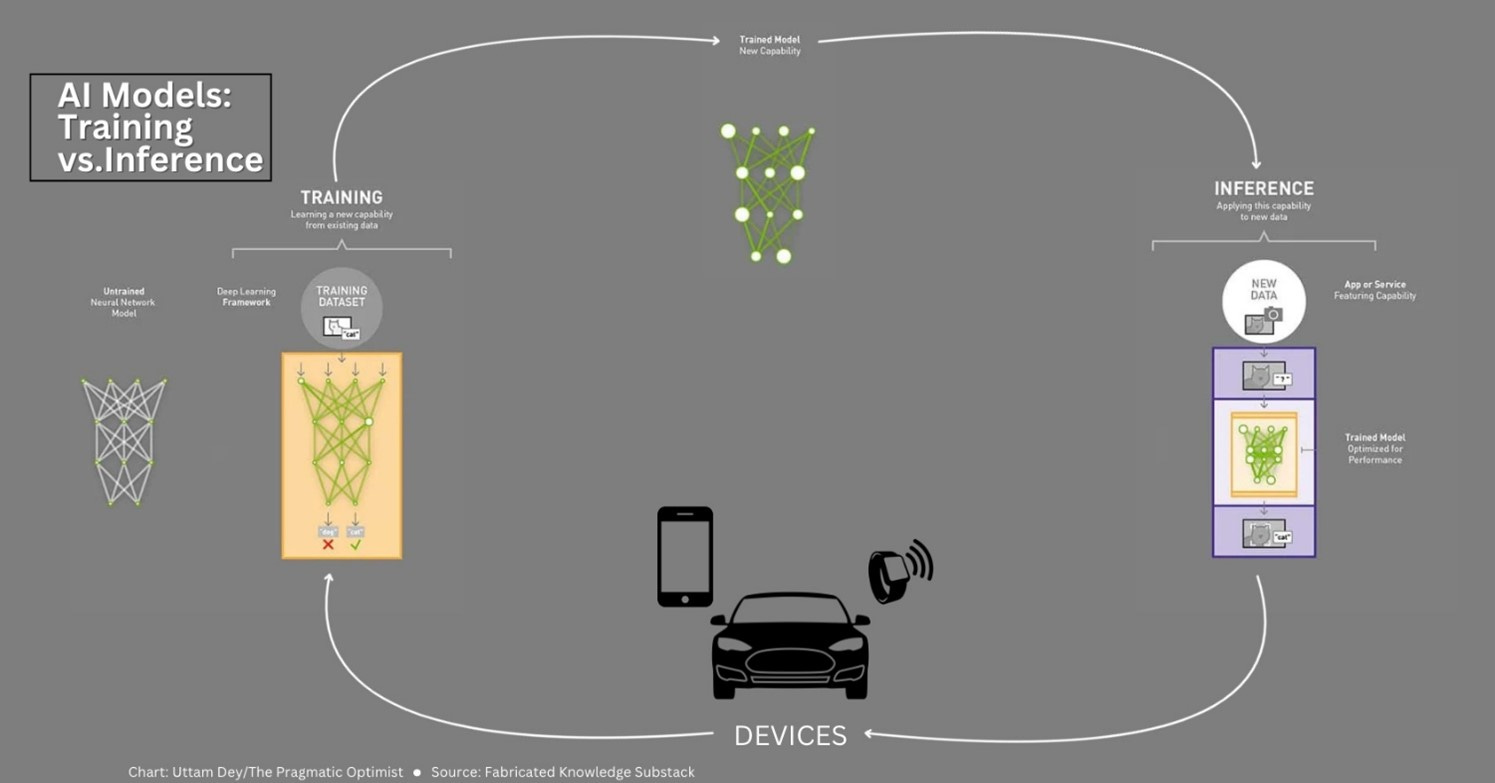

GenAI Model Training: There are two stages in the development of LLMs: training and inference. Training is an iterative process in which a model is fed huge amounts of data and learns from it. It is one of the key stages of model development.

GenAI Model Inference: Inference is the second key stage of LLM development. The trained model is put to work on new inputs, generating outputs that create new insights and uncover new, related patterns.

CSP: Cloud Service Provider, a collective term for cloud hosting companies such as Amazon, Microsoft and Google, which have historically been major purchasers of Nvidia GPUs.

Detailed Results

In summary, these were excellent quarterly results due to huge demand for GPUs and related software services.

Despite being very widely followed by analysts, NVDA beat revenue estimates by 5.5% and beat guidance expectations by 8.3%.

Datacentre revenue beat expectations by 6.6%.

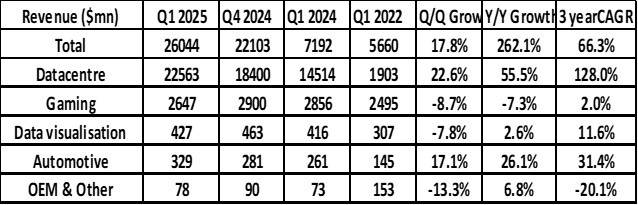

Q1 2025 revenue was over $26bn, up 17.8% on the previous quarter and 262% y/y. Datacentre revenue came in at $22.5bn and accounts for 87% of total revenue, up from 33% three years ago. Automotive revenue is small at $329mn but is also growing fast (31.4% CAGR over three years).

The chart above shows that the $26bn of quarterly revenue is 4-5 times the 2022 run rate. Nvidia’s 3-year revenue compound annual growth rate (CAGR) was an impressive 66.3%.
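A CAGR is the constant annual rate that turns a starting value into an ending value over a given number of years. The minimal Python sketch below shows the calculation. The helper names are our own. The starting quarterly revenue is not taken from the results: it is back-solved from the note's $26bn and 66.3% figures.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def implied_start(end_value: float, rate: float, years: float) -> float:
    """Starting value that grows to end_value at `rate` per year for `years` years."""
    return end_value / (1.0 + rate) ** years


# Quarterly revenue in $bn, from the note: about $26bn now, 66.3% 3-year CAGR.
latest_q_revenue = 26.0
# Back-solved (not reported) quarterly revenue three years earlier.
start = implied_start(latest_q_revenue, 0.663, 3)

print(f"Implied quarterly revenue three years ago: ${start:.2f}bn")
print(f"Growth multiple: {latest_q_revenue / start:.2f}x")
print(f"Check CAGR: {cagr(start, latest_q_revenue, 3):.1%}")
```

A 66.3% CAGR over three years corresponds to a multiple of roughly 4.6x. This is consistent with the "4-5 times" comparison in the text.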

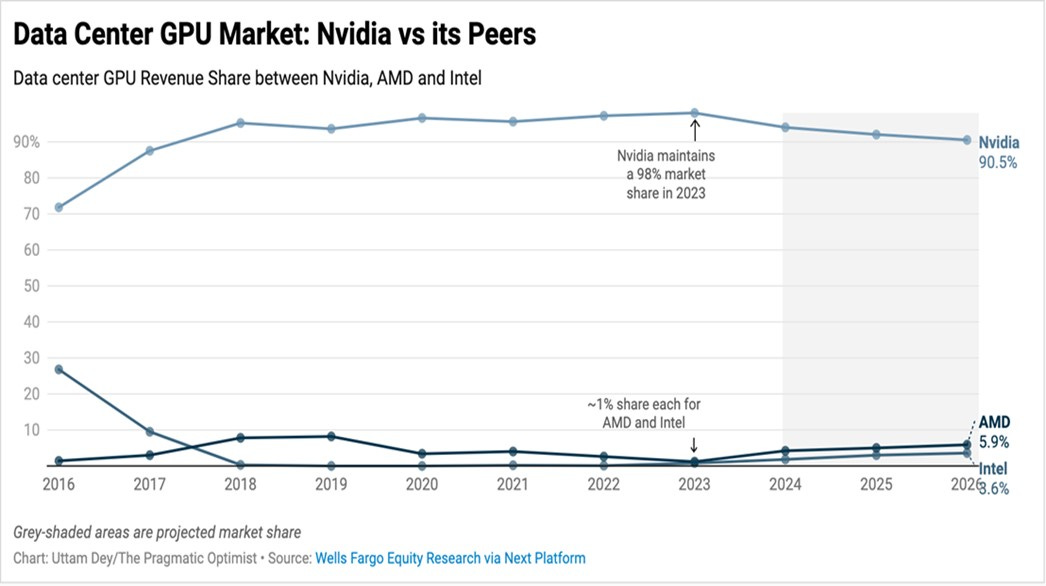

Nvidia has a dominant market share in Datacentre GPUs, between 80% and 90%.

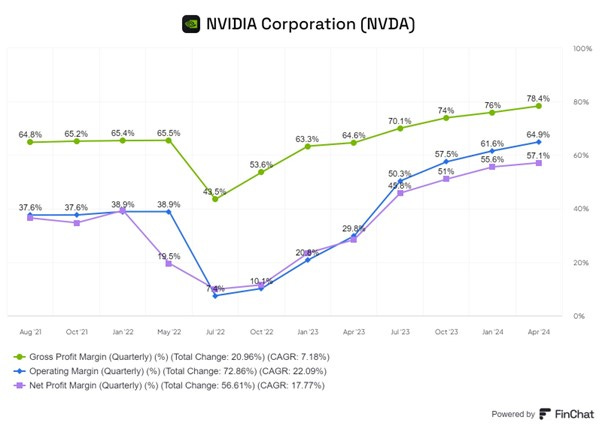

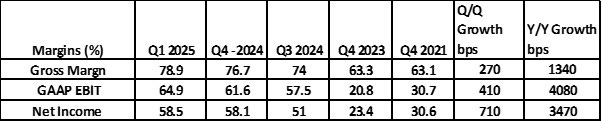

The table below shows that Operating Profit and Net Profit growth have consistently been higher than revenue growth. This indicates the high degree of operating leverage in the business.

Profit margins have grown impressively in the last two to three years. In the last quarter, margins beat expectations.

The Balance Sheet continues to get stronger. Nvidia has $31.4bn in cash & equivalents and just $8.5bn in long-term debt.

Q2 Guidance

Nvidia expects total revenue next quarter to be $28 billion (q/q growth of 8% and y/y growth of 107%). This is 4.9% higher than analysts’ expectations.

They said they expect sequential growth across all market platforms. GAAP gross margins are expected to be 74.8%. For the full year, they expect gross margins to be in the mid-70s percent range.

Nvidia expects 40%-43% Y/Y operating expenditure growth. Given that analysts expect 86% Y/Y revenue growth, the positive operating leverage is expected to continue.

Nvidia expects EPS of $6.21 (q/q growth of 4.0%), which would be y/y growth of 248% (!).

This company guidance forms the basis of analysts’ forecasts. Nvidia has consistently beaten forecasts in recent quarters.
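A toy income statement shows operating leverage at work: when operating expenses grow more slowly than revenue, operating profit grows faster than revenue.

- **Taken from the note:** the 86% revenue growth and the top of the 40%-43% opex guidance.
- **Hypothetical:** the starting gross margin (75%, held constant) and the opex-to-revenue ratio (20%). These are round numbers, not Nvidia's reported figures.

```python
def operating_profit(revenue: float, gross_margin: float, opex: float) -> float:
    """Operating profit = gross profit - operating expenses."""
    return revenue * gross_margin - opex


# Hypothetical starting point (revenue indexed to 100); NOT Nvidia's actual figures.
revenue_0 = 100.0
gross_margin = 0.75   # assumed, and held constant in both years
opex_0 = 20.0         # assumed opex of 20% of revenue

# Growth rates from the note: 86% revenue growth, up to 43% opex growth.
revenue_growth = 0.86
opex_growth = 0.43

revenue_1 = revenue_0 * (1 + revenue_growth)
opex_1 = opex_0 * (1 + opex_growth)

op_0 = operating_profit(revenue_0, gross_margin, opex_0)
op_1 = operating_profit(revenue_1, gross_margin, opex_1)
op_growth = op_1 / op_0 - 1

# Degree of operating leverage: % change in operating profit / % change in revenue.
dol = op_growth / revenue_growth

print(f"Operating profit growth: {op_growth:.1%}, revenue growth: {revenue_growth:.0%}")
print(f"Degree of operating leverage: {dol:.2f}")
```

Under these assumptions, operating profit roughly doubles while revenue grows 86%. A degree of operating leverage above 1 is what "positive operating leverage" means here.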

Highlights from the earnings Conference call

There were many themes in the earnings call, but we will focus on a few of them to keep this note to a reasonable length.

Datacentre Demand:

These results were extraordinary, reflecting the very high demand for Nvidia’s GenAI chips and infrastructure.

Nvidia has a ~90% market share in these key products due to its ability to supply high performing, cost efficient and leading-edge products and services.

Large CSPs are big buyers as they invest heavily in their cloud infrastructure to meet exploding client demand for GenAI training, inference, and compute infrastructure. Nvidia noted that CSPs account for 45% of its Datacentre revenue.

We should note that the three large CSPs (Amazon, Microsoft, and Google), plus Meta Platforms, have announced plans for total capital expenditure of $200bn+ over the next two years. Most of this will be datacentre investment. This is clearly bullish for NVDA.

The economics of this investment are compelling.

“Training and inferencing AI on NVIDIA CUDA is driving meaningful acceleration in cloud rental revenue growth, delivering an immediate and strong return on cloud provider's investment. For every $1 spent on NVIDIA AI infrastructure, cloud providers have an opportunity to earn $5 in GPU instant hosting revenue over four years.”

This implies that the payback period on their investment is about one year. This explains why the large CSPs are making, and will continue to make, large investments.
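The payback arithmetic can be made explicit with a short sketch. It makes two simplifying assumptions of our own:

- The $5 of hosting revenue per $1 of infrastructure spend, from Nvidia's quote, arrives evenly over the four years.
- The payback is measured on revenue. It ignores the CSPs' own operating costs, so a profit-based payback would be longer.

```python
def simple_payback_years(investment: float, total_revenue: float, years: float) -> float:
    """Years to recoup `investment` if `total_revenue` arrives evenly over `years`.

    Assumes a flat revenue profile and ignores operating costs (revenue payback).
    """
    annual_revenue = total_revenue / years
    return investment / annual_revenue


# Nvidia's figure: $1 of spend -> $5 of GPU hosting revenue over four years.
payback = simple_payback_years(investment=1.0, total_revenue=5.0, years=4)
print(f"Revenue payback: {payback:.1f} years ({payback * 12:.1f} months)")
```

Each $1 returns about $1.25 of revenue a year, so the revenue payback comes out at a little under one year.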

“For the final customer of the cloud hosting company, Nvidia GPUs offer:

· the best time to train models,

· the lowest cost to train models and

· the lowest cost to inference LLMs.

For public cloud providers, NVIDIA brings customers to their cloud, driving revenue growth and returns on their infrastructure investments.”

“The demand for GPUs in all the datacentres is incredible. We're racing every single day. And the reason for that is applications like ChatGPT and GPT-4o, and now it's going to be multi-modality and Gemini and its ramp and Anthropic and all of the work that's being done at all the CSPs are consuming every GPU that's out there.”

Demand is very strong and likely to remain so for the next two years.

Nvidia’s wide-ranging technological prowess

Nvidia has played a role in some of the key technological developments announced in the last quarter.

Tesla launched Version 12 of its Full Self-Driving (FSD) software.

“We supported Tesla's expansion of their training AI cluster to 35,000 H100 GPUs. Their use of NVIDIA AI infrastructure paved the way for the breakthrough performance of FSD Version 12, their latest autonomous driving software based on Vision.”

https://www.teslarati.com/tesla-fsd-supervised-v12-experiences

Meta announced its largest LLM, Llama 3. This is what Mark Zuckerberg said about it on Meta’s earnings call.

“The 8 billion and 70 billion parameter models that we released are best-in-class for their scale. The 400 plus billion parameter model (Llama 3) that we're still training seems on track to be industry leading on several benchmarks and I expect the models are just going to improve further from open-source contributions.”

Meta’s Llama 3 would not exist without Nvidia.

“Consumer Internet companies are also a strong growth vertical. Meta's announcement of Llama 3, their latest large language model, which was trained on a cluster of 24,000 H100 GPUs. Llama 3 powers Meta AI, a new AI assistant available on Facebook, Instagram, WhatsApp, and Messenger”

Xiaomi is a leading Chinese mobile handset manufacturer. It announced the launch of a new EV which has been compared to a Porsche but will retail for just $30,000.

“We supported Xiaomi in the successful launch of its first electric vehicle, the SU7 sedan built on the NVIDIA DRIVE Orin, our AI car computer for software-defined AV fleets.”

Exterior and interior of the Xiaomi SU7 sedan, which is built on Nvidia software.

Several large new AI companies have been funded by VCs in the last few months. Nvidia is working with most of them.

“Leading LLM companies such as OpenAI, Adept, Anthropic, Character.AI, Cohere, Databricks, DeepMind, Meta, Mistral, xAI, and many others are building on NVIDIA AI in the cloud.”

Sovereign AI:

Many countries want to build large language models within their own borders. This is driven by a perceived strategic need for local AI expertise, a reluctance to give up national data to datacentres located abroad, and a reaction to a perceived cultural and linguistic threat from large US tech companies.

“Sovereign AI refers to a nation's capabilities to produce artificial intelligence using its own infrastructure, data, workforce, and business networks. Nations are building up domestic computing capacity through various models. Some are procuring and operating Sovereign AI clouds in collaboration with state-owned telecommunication providers or utilities.”

According to Nvidia management, working with Nvidia gives governments an unmatched ability to train geo-specific models on their own data. Italy, France, Singapore, and Japan were all mentioned by Nvidia as working on Sovereign AI projects.

“NVIDIA's ability to offer end-to-end compute to networking technologies, full-stack software, AI expertise, and rich ecosystem of partners and customers allows Sovereign AI and regional cloud providers to jumpstart their country's AI ambitions.”

“We believe Sovereign AI revenue can approach the high single-digit billions this year. The importance of AI has caught the attention of every nation.”

Nvidia’s full technology stack

Nvidia doesn’t just design chips. Its toolkit includes chips, servers, switches, networking, and cutting-edge software.

“Nvidia’s rich software stack and ecosystem and tight integration with cloud providers makes it easy for end customers up and running on Nvidia GPU instances in the public cloud.”

At the centre of this stack sits CUDA. CUDA makes using GPUs for general purpose computing convenient. It is the most advanced parallel computing platform.

CUDA has been tested by competition. AMD and Intel previously tried to come up with CUDA alternatives. They failed.

CUDA’s long head start means it benefits from strong network effects: developers like to learn and work on it because it is the most common platform, and this in turn leads more users to adopt it.

Other positive factors for CUDA are:

· Nvidia’s relentless focus on expanding CUDA libraries.

· Close collaboration with researchers and educational organizations to optimize popular models on CUDA.

· The risk aversion of enterprise buyers, who feel more comfortable with established technology.

· CUDA’s platform versatility, spanning datacentre, desktop workstation, laptop, and even embedded deployment.

CUDA is a powerful software layer that frees Nvidia to constantly optimize every part of its stack.

This quarter, H100 inference performance was boosted 3x. Because Nvidia controls every piece of its infrastructure, it can deliver faster improvements than others.

“The fact that NVIDIA builds this system causes us to optimize all of our chips to work together as a system, to be able to have software that operates as a system, and to be able to optimize across the system.”

“if you had a $5 billion infrastructure and you improved the performance by a factor of two, which we routinely do, then you improve the infrastructure by a factor of two, the value (added) too is $5 billion.”

The key thing is that as you improve performance, you are lowering the total cost of ownership (TCO).
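The link between performance and TCO can be illustrated through cost per unit of work. In the hypothetical sketch below:

- The infrastructure cost is fixed at the $5bn from Jensen Huang's example.
- Throughput is measured in arbitrary "units of work". The baseline figure is our assumption.

Doubling throughput at the same cost halves the cost per unit. Equivalently, the extra work, priced at the old cost per unit, is worth another $5bn.

```python
def cost_per_unit(total_cost: float, units_of_work: float) -> float:
    """Total cost of ownership spread over the work the infrastructure performs."""
    return total_cost / units_of_work


tco = 5.0e9                          # $5bn infrastructure, from the quoted example
baseline_work = 1.0e12               # hypothetical units of work over the system's life
improved_work = 2.0 * baseline_work  # performance improved by a factor of two

before = cost_per_unit(tco, baseline_work)
after = cost_per_unit(tco, improved_work)
# Extra work delivered, valued at the baseline cost per unit.
value_added = (improved_work - baseline_work) * before

print(f"Cost per unit: {before:.4f} -> {after:.4f}")
print(f"Value added at baseline cost per unit: ${value_added / 1e9:.1f}bn")
```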

“We literally build the entire datacentre and we can monitor everything, measure everything, optimize across everything. We know where all the bottlenecks are. We're not guessing about it….we're delivering systems that perform at scale. And the reason why we know they perform at scale is because we built it all here.”

“We know where we need to optimize with them, and we know where we have to help them improve their infrastructure to achieve the most performance. This deep intimate knowledge at the entire datacentre scale is fundamentally what sets us apart today.”

The ability to make world-leading GPUs and the decade-long investment in CUDA are a key part of the economic moat possessed by Nvidia.

Training and Inference

LLMs must be trained, and training is a major source of demand for GPUs.

“Training continues to scale as models learn to be multimodal, understanding text, speech, images, video and 3D and learn to reason and plan.”

Models only need to be trained once and then periodically updated with more data. Inference, on the other hand, is required constantly.

In inference, trained models constantly process new data from users and devices and generate predictions or responses based on what they have learned.

“Our inference workloads are growing incredibly. With generative AI, inference, which is now about fast token generation at massive scale, has become incredibly complex.”

“In our trailing four quarters, we estimate that inference drove about 40% of our Datacentre revenue. Both training and inference are growing significantly.”

“As generative AI makes its way into more consumer Internet applications, we expect to see continued growth opportunities as inference scales both with model complexity as well as with the number of users and number of queries per user, driving much more demand for AI compute.”

Chip Innovation

Chip innovation is one of the key reasons that Nvidia has beaten the rest of the pack to become the leader in AI. The chart below shows how Nvidia has designed ever-faster chips, as measured in teraflops (TFLOPS). One TFLOPS is the ability of a computer system to perform one trillion floating-point calculations per second. Saying something has “6 TFLOPS”, for example, means that its processor setup can handle 6 trillion floating-point calculations every second at its peak rating. The H100 is rated at about 4,000 TFLOPS, while Blackwell will have 5X more.

The H200 (Hopper 200) is the most advanced chip currently being supplied. NVDA delivered the first H200 chip to OpenAI during the quarter. The recent OpenAI demo went viral online and showed the tremendous human-like capability of OpenAI’s latest LLM. This would not have been possible without the Nvidia H200.
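To make the units concrete: 1 TFLOPS is 10^12 floating-point operations per second. So 4,000 TFLOPS is 4 × 10^15 operations per second, and a 5X improvement gives 2 × 10^16.

The sketch below also estimates how long a workload would take. Two inputs are illustrative assumptions, not figures from the note:

- The 10^21-operation job size.
- The 40% utilisation. Real chips rarely sustain their peak rating, and quoted peak figures also depend on the numerical precision used.

```python
TERA = 1e12  # operations per second in one TFLOPS


def tflops_to_flops(tflops: float) -> float:
    """Convert TFLOPS (trillions of operations per second) to operations per second."""
    return tflops * TERA


def job_seconds(total_ops: float, tflops: float, utilisation: float) -> float:
    """Wall-clock time for `total_ops` at a peak rating and a sustained utilisation."""
    return total_ops / (tflops_to_flops(tflops) * utilisation)


h100_tflops = 4_000                  # figure quoted in the note
blackwell_tflops = 5 * h100_tflops   # "5X more"

workload = 1e21      # hypothetical job size, in floating-point operations
utilisation = 0.40   # hypothetical share of peak actually achieved

for name, rating in [("H100", h100_tflops), ("Blackwell", blackwell_tflops)]:
    hours = job_seconds(workload, rating, utilisation) / 3600
    print(f"{name}: {tflops_to_flops(rating):.1e} FLOP/s peak, ~{hours:.1f} h for the job")
```

At equal utilisation, the job time scales inversely with the rating. A 5X faster chip therefore finishes the same job in a fifth of the time.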

“H200 nearly doubles the inference performance of H100, delivering significant value for production deployments.”

Blackwell, which was announced recently and will be offered in liquid-cooled as well as air-cooled configurations, is next in line to launch, with shipments expected to start at the end of Q2 2024.

According to Nvidia, the Blackwell GPU trains models 4x faster than the H100 and is 30x faster at model inference. It lowers the TCO (Total Cost of Ownership) and energy cost by up to 25x, thanks to features like liquid cooling at scale.

Jensen Huang said his competitors could give away their GPUs for free and people would still prefer Nvidia chips due to lower lifetime TCO.

The demand for Blackwell is likely to be very high.

“We have 100 different computer system configurations that are coming this year for Blackwell. And that is off the charts. ... And so, you're going to see liquid cooled version, air cooled version, x86 versions, Grace versions, so on and so forth.”

“Blackwell time-to-market customers include Amazon, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI.”

“There's a whole bunch of systems that are being designed. And they're offered from all our ecosystem of great partners. The Blackwell platform has expanded our offering tremendously. The integration of CPUs and the much more compressed density of computing, liquid cooling is going to save datacentres a lot of money in provisioning power and not to mention to be more energy efficient.”

Blackwell is likely to see huge demand after its release later this year. However, Nvidia are already looking beyond Blackwell.

“Well, I can announce that after Blackwell, there's another chip. And we are on a one-year rhythm. And so, and you can also count that -- count on us having new networking technology on a very fast rhythm.”

The relentless innovation at Nvidia has been remarkable. While AMD and Intel may gain some market share (partly due to excess demand relative to supply faced by Nvidia), Nvidia is well placed to innovate and continue to offer the best, most efficient, products along with the leading software stack.

Super-productivity of DGX servers

The Nvidia DGX platform is a very comprehensive AI and data analytics solution designed to accelerate the development and deployment of AI models. It has hardware and software components (including GPUs and the CUDA toolkit) optimized for high-performance computing and deep learning tasks.

Models such as the open-source Llama 3 model can be hosted on DGX Servers by CSPs for millions of end users to use.

“Using Llama 3 with 700 billion parameters, a single NVIDIA HGX H200 server can deliver 24,000 tokens per second, supporting more than 2,400 users at the same time.”

“That means for every $1 spent on NVIDIA HGX H200 servers at current prices per token, an API provider serving Llama 3 tokens can generate $7 in revenue over four years.”
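The server figures in the quote can be unpacked with simple arithmetic. Dividing 24,000 tokens per second by 2,400 concurrent users gives about 10 tokens per second per user.

The sketch below also converts the server's throughput into annual token capacity. The 60% average utilisation is a hypothetical assumption, not a figure from the call.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600

server_tokens_per_sec = 24_000   # quoted HGX H200 throughput
concurrent_users = 2_400         # quoted number of simultaneous users
utilisation = 0.60               # hypothetical average load over a year

tokens_per_user_per_sec = server_tokens_per_sec / concurrent_users
annual_tokens = server_tokens_per_sec * SECONDS_PER_YEAR * utilisation

print(f"Per-user generation speed: {tokens_per_user_per_sec:.0f} tokens/s")
print(f"Annual capacity at {utilisation:.0%} load: {annual_tokens / 1e9:.0f}bn tokens")
```

Multiplying annual capacity by a price per token gives the server's revenue potential. That is the calculation behind the "$7 per $1" claim, though Nvidia's pricing inputs are not given here.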

Automotive

GPU demand for cars will grow rapidly as EVs with better self-driving properties are introduced to the market. Nvidia is seeing significant growth in the automotive space.

“Automotive. Revenue was $329 million, up 17% sequentially and up 11% year-on-year. Sequential growth was driven by the ramp of AI cockpit solutions with global OEM customers and strength in our self-driving platforms. Year-on-year growth was driven primarily by self-driving.”

“We also announced a number of new design wins on NVIDIA DRIVE Thor, the successor to Orin, powered by the new NVIDIA Blackwell architecture with several leading EV makers, including BYD, XPeng, GAC's Aion Hyper and Neuro. DRIVE Thor is slated for production vehicles starting next year.”

“Video Transformers are enabling dramatically better autonomous driving capabilities and propelling significant growth for NVIDIA AI infrastructure across the automotive industry.”

“We expect automotive to be our largest enterprise vertical within Datacentre this year, driving a multibillion revenue opportunity across on-prem and cloud consumption.”

AI Factories

Nvidia refers to datacentres powered by its GPUs, hardware, software, and networking as the “AI Factory”.

“Large clusters like the ones built by Meta and Tesla are examples of the essential infrastructure for AI production, what we refer to as AI factories.”

“These next-generation datacentres host advanced full-stack accelerated computing platforms where the data comes in and intelligence comes out.”

“In Q1, we worked with over 100 customers building AI factories ranging in size from hundreds to tens of thousands of GPUs, with some reaching 100,000 GPUs.”

These new AI Datacentres are set to completely transform how computers will work and what they will produce.

“The next industrial revolution has begun. Companies and countries are partnering with NVIDIA to shift the trillion-dollar installed base of traditional datacentres to accelerated computing and build a new type of datacentre, AI factories, to produce a new commodity, artificial intelligence.”

“We are fundamentally changing how computing works and what computers can do, from general purpose CPU to GPU accelerated computing, from instruction-driven software to intention-understanding models, from retrieving information to performing skills, and at the industrial level, from producing software to generating tokens, manufacturing digital intelligence.”

“Longer term, we're completely redesigning how computers work. And this is a platform shift. The computer is no longer an instruction-driven only computer. It's an intention-understanding computer. And it understands, of course, the way we interact with it, but it also understands our meaning, what we intend that we asked it to do and it has the ability to reason, inference iteratively to process a plan and come back with a solution.”

“And so every aspect of the computer is changing in such a way that instead of retrieving prerecorded files, it is now generating contextually relevant intelligent answers. And so that's going to change computing stacks all over the world.”

This concludes our review of the earnings call, although there are a few topics we have not covered.

These include Gaming demand for GPUs, computer networking opportunities, the new GB200 superchip for supercomputers, Nvidia CPUs based on the Arm architecture and the launch of Nvidia Inference Microservices (NIM), all of which are likely to be sources of future revenue growth.

The Outlook for Demand

Currently Nvidia has a completely dominant market share. It can sell everything it makes at a high margin. The company has indicated that supply constraints will last “well into next year.”

The company does not just offer standalone GPUs but an entire technology stack, including CUDA. This stack has a long track record of being updated, optimised, and improved, which reduces the total cost of ownership (TCO) over time.

CSP demand will continue to grow as the three large CSPs, plus Meta Platforms, are likely to make collective capital expenditures of $200bn for at least the next two years.

There are other sources of growing demand beyond the CSPs.

Countries want to set up Sovereign AI Datacentres to create LLMs based on their own valuable data, serving their languages and cultures.

The growth in EVs and self-driving cars is likely to continue, and this will lead to higher demand for Nvidia products.

Summary

· Nvidia has delivered a remarkably strong set of quarterly numbers driven by datacentre growth.

· Demand looks set to be strong and is likely to remain ahead of supply for some time. Demand will be driven by the Datacentre segment as H200 and Blackwell supplies ramp up, by the Automotive sector and by Sovereign AI investments.

· The company has a dominant position, and competitors are unlikely to take meaningful market share in the foreseeable future.

· New, better products and faster innovation are likely to increase the strength of Nvidia’s moat.

Valuation

The huge run in Nvidia’s share price (up 282% in the last year) has understandably led to comments that it is now a bubble. This may be correct, but we hope we have shown that this price move reflects greatly improved fundamentals.

Nvidia currently trades at 40X analysts’ one-year-forward estimated earnings, which implies a forward earnings yield of just 2.5%.

Nvidia currently trades at an estimated historic Price to Free Cash Flow ratio of 74, which implies a historic Free Cash Flow yield of just 1.35%. This is misleadingly low, as Free Cash Flow is likely to grow strongly in the next two years.
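Both yields are simply the reciprocals of the corresponding valuation multiples. A minimal sketch using the two multiples quoted above:

```python
def yield_from_multiple(multiple: float) -> float:
    """Earnings (or free cash flow) yield is the reciprocal of the price multiple."""
    if multiple <= 0:
        raise ValueError("multiple must be positive")
    return 1.0 / multiple


forward_pe = 40.0       # price / one-year-forward estimated earnings
historic_p_fcf = 74.0   # price / historic free cash flow

print(f"Forward earnings yield: {yield_from_multiple(forward_pe):.2%}")
print(f"Historic FCF yield: {yield_from_multiple(historic_p_fcf):.2%}")
```

Because the P/FCF multiple uses historic cash flow, its yield is backward-looking. This is why it understates the yield if free cash flow grows strongly.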

It would be hard to suggest that the stock is cheap at these levels but the real question is what is the correct valuation for a stock with Nvidia’s economic prospects and profitability (ROE of 115% and ROCE of 96%- see chart below)?

Let us consider a simple back of the envelope calculation.

Nvidia’s FY 2025 revenue is expected to be $120 billion. If we assume annual revenue growth of 25% for the following three years, full-year revenue will be about $234bn in FY 2028.

We assume the net profit margin in FY 2028 will be 50% compared with the current 57%.

This implies a net profit of $117bn and an EPS of $47 in FY 2028.

With a FY 2028 P/E ratio of 30X, we arrive at a $1410 price target for the end of FY 2028, indicating 25% upside potential from the current price.

This implies an 8% annualized return for the next 3 years. This is not an adequate return but it is likely that our assumptions have been too conservative.
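The back-of-the-envelope steps above can be reproduced in a few lines. The inputs are the note's own figures, with two exceptions:

- The EPS of $47 is taken as given, because the share count behind it is not stated.
- The current price is back-solved from the stated 25% upside.

```python
def project(value: float, growth: float, years: int) -> float:
    """Compound `value` at `growth` per year for `years` years."""
    return value * (1 + growth) ** years


revenue_fy25 = 120.0                            # $bn, expected FY 2025 revenue
revenue_fy28 = project(revenue_fy25, 0.25, 3)   # 25% annual growth to FY 2028
net_profit_fy28 = revenue_fy28 * 0.50           # assumed 50% net margin

eps_fy28 = 47.0                 # note's EPS estimate (share count not stated)
price_target = eps_fy28 * 30    # 30X P/E in FY 2028
upside = 0.25                   # stated upside from the current price
implied_price_now = price_target / (1 + upside)
annualised = (1 + upside) ** (1 / 3) - 1        # total return spread over three years

print(f"FY28 revenue ${revenue_fy28:.1f}bn, net profit ${net_profit_fy28:.1f}bn")
print(f"Price target ${price_target:,.0f}, implied current price ${implied_price_now:,.0f}")
print(f"Annualised return: {annualised:.1%}")
```

The annualised figure comes out at about 7.7%, which rounds to the 8% quoted in the text.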

We will supplement this calculation with a discounted cash flow (DCF) model.

This has several assumptions including the following:

· FY 25 Revenue growth - 96%. (Analysts’ consensus is

· FY 26 Revenue growth - 31%. (Analysts’ consensus is

· FY 27 to FY 29 Revenue growth - 25%

· FY 25 to FY 29 Net Margins = 55.25% (current margin is 58%)

· Weighted Average Cost of Capital (WACC) is 11.5%.

· Exit P/E multiple in FY 29 is assumed to be 35X (current is 40X).

These and other assumptions lead to an estimated value per Nvidia share of $1060, which is 7.7% below the current share price. This suggests Nvidia shares are currently slightly overvalued.

However, a well-known problem with these models is that relatively small changes in the inputs can result in large variations in the outputs.
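The simplified sketch below illustrates both the structure of such a model and its sensitivity. It is not the model behind the $1060 figure. Our simplifications are:

- Net income stands in for free cash flow.
- Each fiscal year is discounted by a full year, with FY 25 as year one.
- The terminal value is the exit P/E times FY 29 net income.
- The result is an equity value in $bn rather than a per-share value, because the note's share count and "other assumptions" are not given.

Revenue starts from the note's $120bn FY 25 estimate. FY 26 grows 31%, and we assume 25% for each of FY 27, FY 28 and FY 29. The margin is 55.25%.

```python
def dcf_value(fy25_revenue: float, growth: list[float], margin: float,
              wacc: float, exit_pe: float) -> float:
    """Equity value ($bn): discounted net income plus an exit-multiple terminal value.

    Simplifications (ours, not the note's): net income stands in for free cash
    flow, and year t is discounted by (1 + wacc) ** t with FY 25 as t = 1.
    """
    revenues = [fy25_revenue]
    for g in growth:                      # growth rates for FY 26 onwards
        revenues.append(revenues[-1] * (1 + g))
    incomes = [r * margin for r in revenues]
    pv_income = sum(ni / (1 + wacc) ** t for t, ni in enumerate(incomes, start=1))
    terminal = exit_pe * incomes[-1]      # exit value at the end of the final year
    pv_terminal = terminal / (1 + wacc) ** len(incomes)
    return pv_income + pv_terminal


# FY 26 = 31%; FY 27 to FY 29 assumed at 25% each.
GROWTH = [0.31, 0.25, 0.25, 0.25]
base = dcf_value(120.0, GROWTH, 0.5525, wacc=0.115, exit_pe=35)

# Small changes in two inputs move the answer a lot.
for wacc in (0.105, 0.115, 0.125):
    for pe in (30, 35, 40):
        v = dcf_value(120.0, GROWTH, 0.5525, wacc=wacc, exit_pe=pe)
        print(f"WACC {wacc:.1%}, exit P/E {pe}X: {v / base - 1:+.0%} vs base case")
```

Moving the WACC by one percentage point and the exit P/E by 5X in opposite directions shifts the valuation by well over 10% either way. This is the sensitivity problem described in the text.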

Conclusions

Cecil Rhodes famously made his money in the South African mining boom not by prospecting for gold but by selling shovels and supplies to the thousands prepared to take the various risks involved in prospecting for gold.

NVIDIA is the dominant shovel and services supplier to the AI Gold Rush.

At some point, customers will have all the Datacentre capacity they need, and demand could fall off a cliff. However, that is at least two years away. Nvidia is likely to grow larger in the meantime.

Given the outstanding business prospects and the strong moat that Nvidia has as well as its very high profitability, we are disinclined to sell the stock.

We do not feel it is prudent to add to our position, but we will continue to maintain our existing holding.