Nvidia (NVDA)

Jensen Huang's keynote speech at the GTC 2024.

I listened to the above speech, which was scheduled for two hours starting 1.30 pm San Jose, California time. The problem is I am currently in Ahmedabad, India which is 12 hours ahead. The speech was amazing and very much worth staying up for. It was at Nvidia's annual developers’ conference, and a lot of the stuff was pretty technical and way above my understanding.

The main item was the introduction of the new highly anticipated Blackwell GPU. It is the successor to the very popular H100 and H200 GPUs which are part of the Hopper Series. These have driven the huge sales surge that NVDA has seen in the last two years. The quarterly sales rate in 2024 is almost the same as their annual sales in 2022, mainly due to demand from cloud hyperscalers, building out vast datacentres.

“Generative AI is the defining technology of our time. Blackwell GPUs are the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

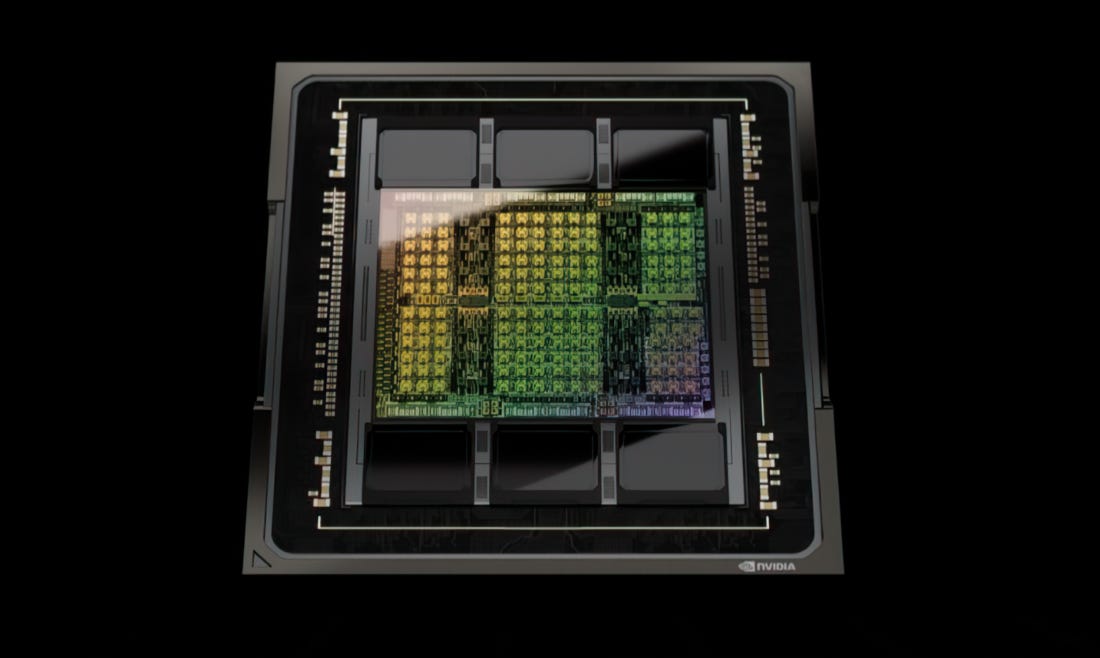

Blackwell GPU

The Blackwell GPU will be available as a standalone GPU, or two Blackwell GPUs can be combined and paired with Nvidia’s Grace CPU to create the GB200 Superchip. The Blackwell has 208bn transistors.

“Blackwell is not a Chip; it is a platform.”

Blackwell offers up to a 30x performance increase, compared with the H100 GPU for LLM inference workloads while, most importantly, using up to 25x less energy.

Many of Nvidia’s biggest customers are currently developing their own AI chips, partly because the Hopper series’s power consumption was too high.

The hyperscalers will be the first companies to start offering access to Blackwell chips through their cloud platforms. The companies mentioned as working very closely with NVDA included Amazon, Microsoft, Google, Oracle, Ansys, Cadence, Synopsys and Snowflake.

“People think we make GPUs, …we do but they do not look like GPUs.”

Blackwell is completely compatible with Hopper, so people just replace one with the other in their servers and nothing else needs to be done.

Jensen also announced the DGX SuperPOD supercomputer system. This made up of eight or more GB200 systems. It has 36 Grace Blackwell 200 Superchips paired to run as a single computer. Nvidia says customers can scale up the SuperPOD to support tens of thousands of GB200 Superchips depending on their needs.

The DGX SuperPOD

The DGX SuperPOD features liquid-cooled rack-scale architecture, which means the system is cooled off using fluid circulating through a series of pipes and radiators rather than fan-based air cooling, which can be less efficient and more energy intensive. Huang mentioned 2 litres a second will flow through the system. Water flows in at room temperature and flows out at the temperature of sauna water.

The Blackwell GPU and GB200 Superchip will be Nvidia’s flagship products for AI training and inferencing and are likely to see huge demand as soon as the order book opens.

“The rate at which we are advancing computing is insane, but it is still not enough, so we invented another chip.”

This is the NVLink, which itself has 50bn transistors, the same as the H100. Four of the NVLinks packaged together in one chip, will allow every GPU to talk to every other GPU in the datacentre at full speed.

The call it the DGX and it is an exaflop computer system in a rack.

Definition: An exaflop is a measure of performance for a supercomputer that can calculate at least 1018 or one quintillion floating-point operations per second. Floating point refers to calculations made where all the numbers are expressed with decimal points.

This computer will become the most powerful for AI LLM training and inferencing.

They also announced a service called NIM. This is a software that contains a large number of pre-trained models with the appropriate CUDA software. Everybody will be able to download it from Nvidia and run it on to their data to create their own LLMs. In this way, they believe Nvidia will come the dominant AI Foundry, in the same way as TSMC is the dominant advanced chip foundry.

They also talked about a simulation engine which would run on a new computer called the OVX and which will operate in something called the Omniverse. I think this is a side reference to the Metaverse pioneered by Mark Zuckerburg.

This will allow companies to create digital twins of factories or warehouses they wish to build, before they build them. These can be simulated in working form to test the full design virtually. A lot of lessons can be learned to improve the design before the physical building begins. The Apple Vision-Pro can be linked to this so the user can “walk through” their virtual factory or warehouse.

They also talked about Project Groot which is a general-purpose software model for training robots. Huang was joined on stage with some impressive robots.

Conclusion

This was a long technical talk from Nvidia showcasing their new technology. We only understood a small fraction of it. People say it is only a question of time before Intel and AMD develop new chips to more effectively challenge Nvidia’s GPUs. That may be the case. However, this talk showed that Nvidia is not standing still and is developing more cutting-edge technology at an astonishing pace.